Review: NZXT DOKO

March 17, 2015

Tags: doko, mediapc, tv, steam machine, nzxt, streaming, media, Living room, remote, 1080p

Categories: Tech Reviews, Technology

When I was planning for this year’s CES (2015), NZXT told me they were setting up meetings, but only had one new product they’d be showing. They wouldn’t tell me what it was or what it did, only that I’d see it when I visited their suite.

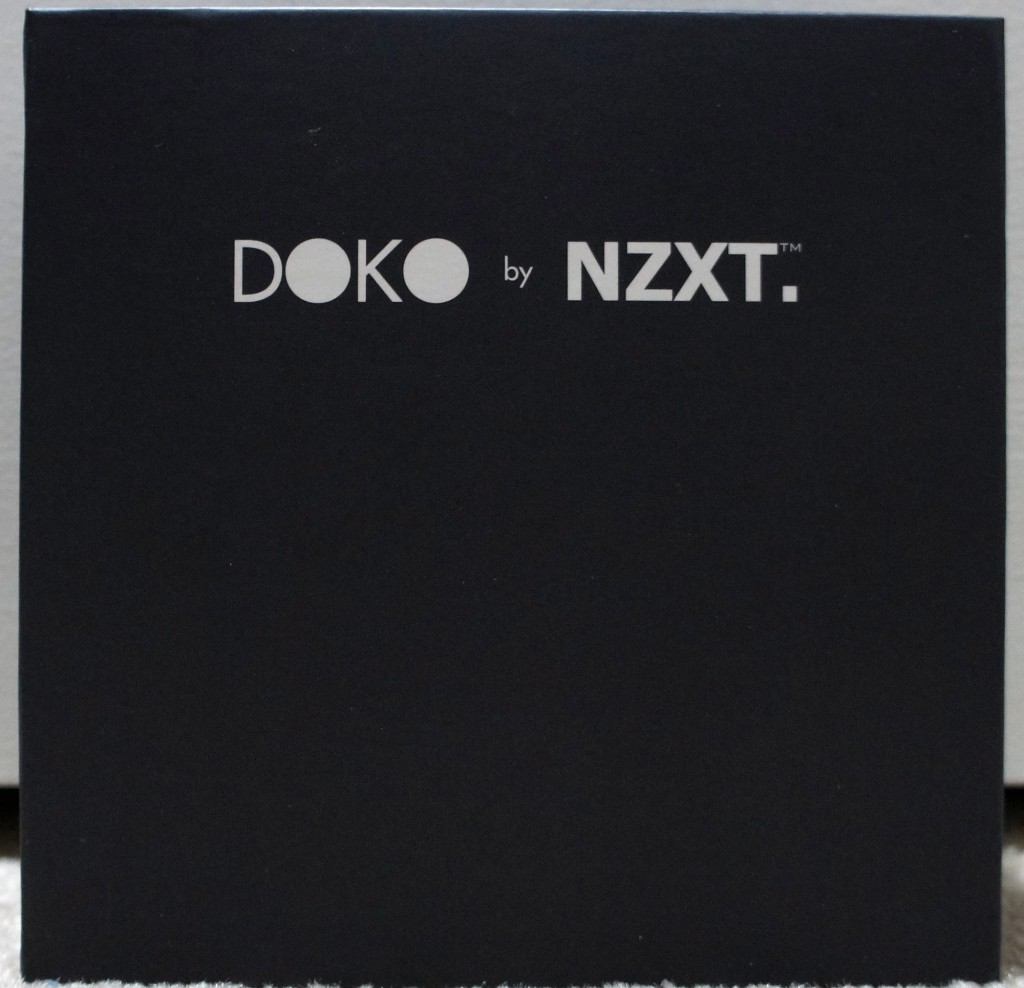

I arrived at NZXT’s suite and saw they had a television hooked up to a small black box, which had a keyboard, mouse, and gamepad attached to it. They simply told me “this is the DOKO”, to which I replied “err… what is a DOKO?”

The NZXT DOKO is a device designed for streaming a PC to a television, and is aimed at replacing the living room media PC.

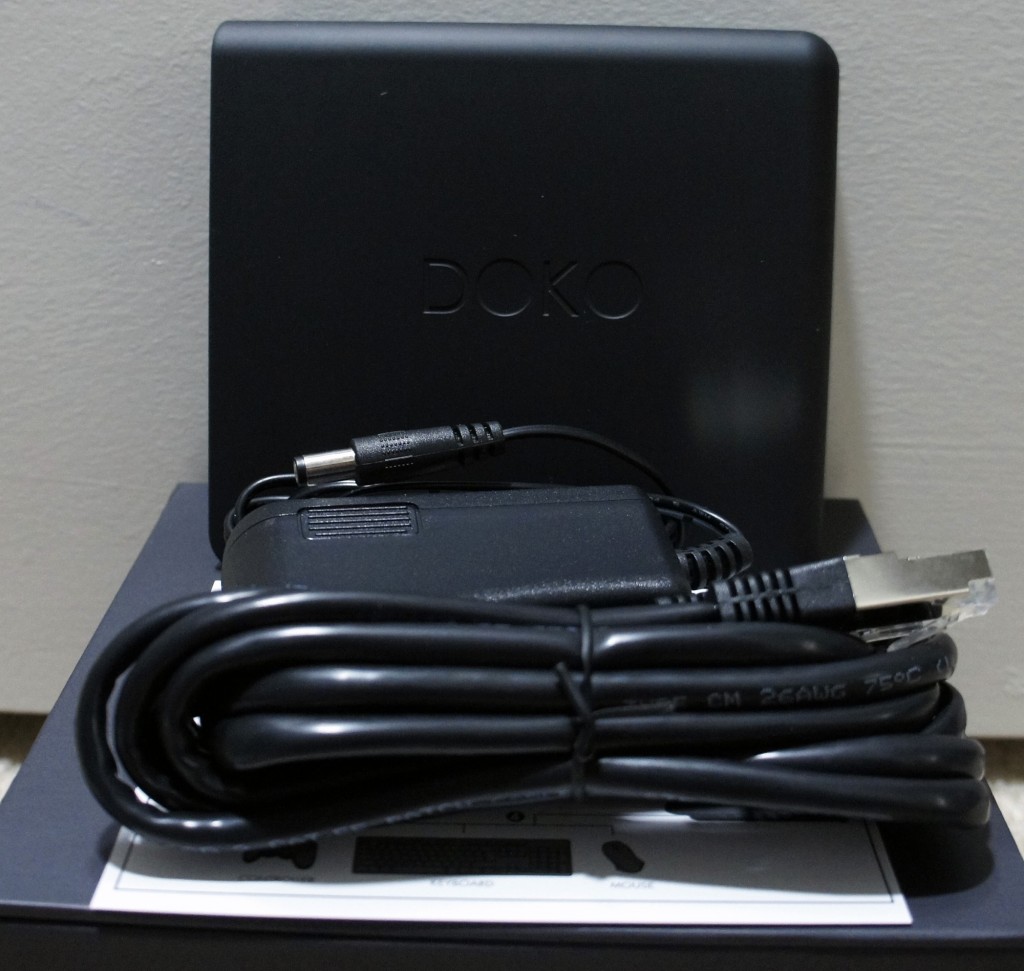

A small black box with a power button, HDMI out, 3.5mm audio out, Ethernet out, and 4x USB 2.0 in, the DOKO is a pretty sleek device. The specifications are below:

- Model Number – AC-DOKOM-M1

- Dimensions – W: 108mm H: 29mm D: 121mm

- Weight – 0.32kg

- Included Accessories – Ethernet Cable, Power Cable

- CPU – Wonder Media 8750

- Memory – 256MB DDR3

- Boot Storage – 8MB SPI Flash

- Network Connectivity – Gigabit Ethernet

- Video Output – HDMI 1.3 or higher

- Audio Output – Headphone Port

- Video Signal – 1080p @ 30FPS

- Power – 12V 2.5A DC Adapter

- Accessory Connection – 4x USB 2.0, USBoIP

- Materials – Rubberized Coating, ABS Plastic, PCB

- Color – Black

- UPC – 815671012241

- Warranty – 2 Years

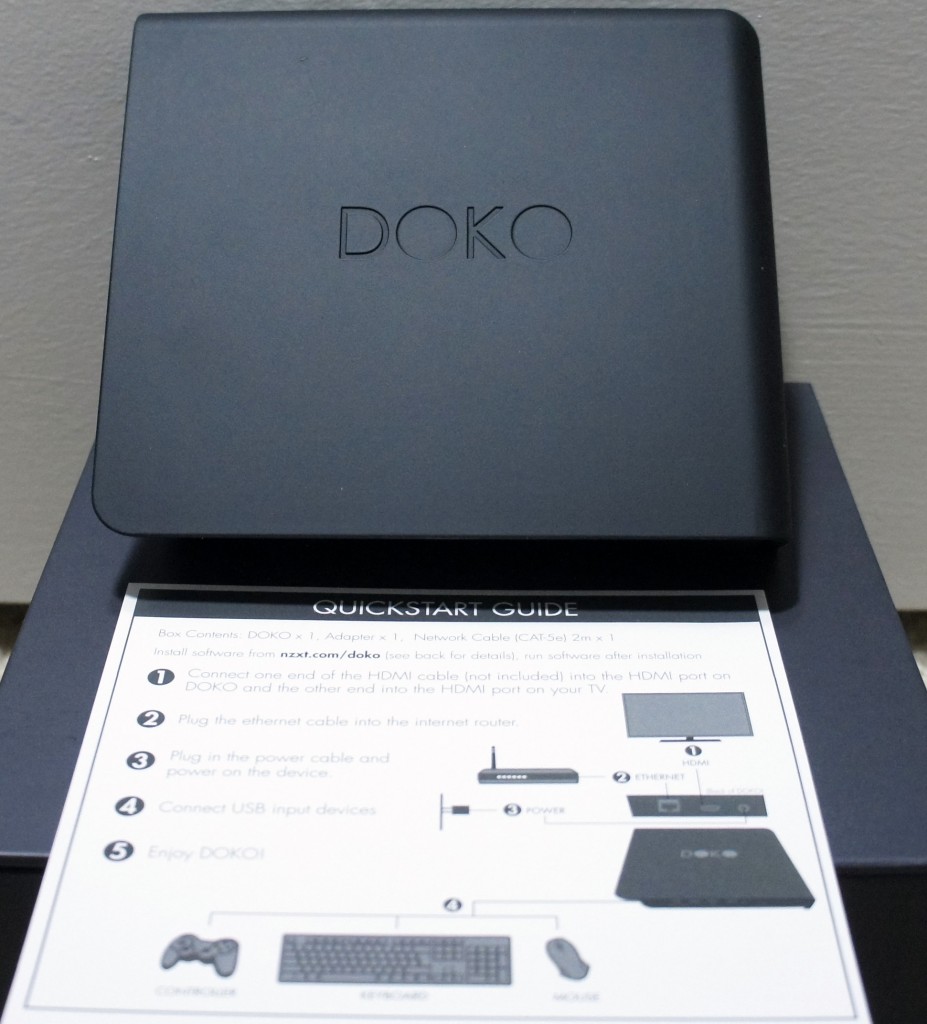

The way the device works is fairly straightforward: the DOKO plugs into a TV via HDMI and into the router via Ethernet (NZXT opted not to include a Wi-Fi option to avoid potential latency/bandwidth issues, and presumably to lessen costs), and a keyboard/mouse/gamepad/USB-powered car battery/etc. plugs into the DOKO via USB.

Once the DOKO is set up, the next step is to install the DOKO software on any PC you wish to use with your DOKO. The software is available on NZXT’s website, and has a fairly straightforward installation. From then on, the software runs in the background (much like a Plex server) and makes the PC available for DOKO streaming. The software also allows you to set up a password required in order to connect the DOKO to your PC, which is handy for preventing roommates or family members from accessing your PC at inopportune moments.

With the software running on the PC, and the DOKO booted up, all there is to do is select the desired PC and remote in. The host PC will automatically detect any USB device plugged into the DOKO. At that point, you can do anything you normally would on your PC through the DOKO.

Unfortunately, the DOKO is limited to only 30 frames per second, though it does so at 1080p. This is fine for most tasks it was designed for, including watching movies (except for the Hobbit movies; Peter Jackson disapproves of the DOKO’s disappointing framerate). When it comes to gaming, gameplay is surprisingly smooth and doesn’t really have any noticeable latency. However, gamers might object to the framerate, since after all, 30 FPS is only for people on last-gen consoles, playability be damned! To them I say, go back to your room and play the games there, or get the new Nvidia Shield for twice the price of the DOKO.
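For a rough sense of the numbers involved in a 1080p stream at 30 FPS, here is a back-of-the-envelope sketch of what the video would need if it were sent completely uncompressed. The 24 bits per pixel figure is my own assumption, not something from NZXT's spec sheet, and the raw figure comes out above what a Gigabit Ethernet link can nominally carry, so the DOKO presumably compresses its stream in some way.

```python
# Back-of-the-envelope estimate: raw (uncompressed) video bandwidth.
# Assumption (not from NZXT's spec sheet): 24 bits per pixel.

def raw_video_mbps(width, height, fps, bits_per_pixel=24):
    """Return the uncompressed video bitrate in megabits per second."""
    return width * height * bits_per_pixel * fps / 1_000_000

GIGABIT_ETHERNET_MBPS = 1000  # nominal link rate, before protocol overhead

doko_stream = raw_video_mbps(1920, 1080, 30)
print(f"Raw 1080p30: {doko_stream:.0f} Mbps")
print(f"Fits on Gigabit Ethernet uncompressed: {doko_stream <= GIGABIT_ETHERNET_MBPS}")
```

Doubling the framerate to 60 FPS would double the raw figure, which may be part of why the device stops at 30.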

The NZXT DOKO is a great product. At $99, it certainly allows you to do a lot more than you’d be able to do with a media PC that costs even twice as much (assuming you have a solid PC at home already). The device is small, a breeze to set up, and easy to use. Personally, I’ve been using it in conjunction with Logitech’s K830 wireless keyboard, which makes the DOKO extremely convenient for couch use.

Overall, I’d recommend the DOKO to anyone looking to get a living room PC of any sort, as long as they have a solid PC elsewhere in the household that they can stream from.

Review: AVerMedia CV710 ExtremeCap U3 Capture Card

March 7, 2015

Tags: hdmi, cv710, 60 fps, u3, usb3, capture, gaming, usb 3, capture card, avermedia, 1080p, extremecap, 1080p60, extremecap u3, games, usb 3.0, card, game

Categories: Tech Reviews, Technology

When Michael presented the idea to me of creating videos for the gaming section of Not Operator, I thought it would be a good idea. I told him it would be easy to stream and capture PC games, but our only option for Xbox One would be using Twitch.tv, and we wouldn’t have any way to capture Wii U gameplay. He suggested we get ourselves a game capture card for review.

Having never looked into the world of capture cards before, I didn’t really know what I was looking for, and I didn’t know any of the major brands that create capture cards other than Hauppauge (which is known for its TV tuner cards anyway).

I began researching products, looking up reviews, and checking forums to see what the most commonly recommended capture cards were. What I found was that many existing capture cards have been on the market for a few years already; very few came out within the last year. The side effect of this is that many capture cards have a variety of limitations, such as not being able to capture 1080p video at higher than 30 frames per second, the inability to capture sound, or requiring some weird mess of cables to get working. This includes capture cards up into the $300 range.

Soon after, I discovered that many people suggested some Japanese brand capture cards that would have to be imported to the US, and still wouldn’t even capture audio. I thought this was a strange thing. Considering how popular game streaming and Let’s Play videos are on YouTube, I would’ve expected a variety of well-known companies to have entered the capture card market.

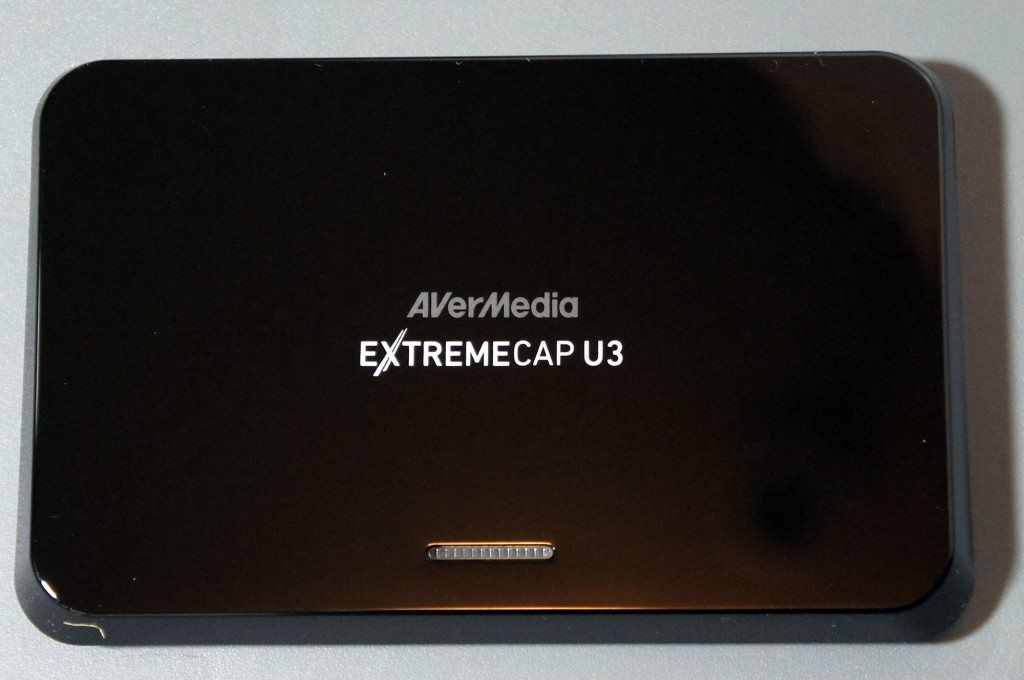

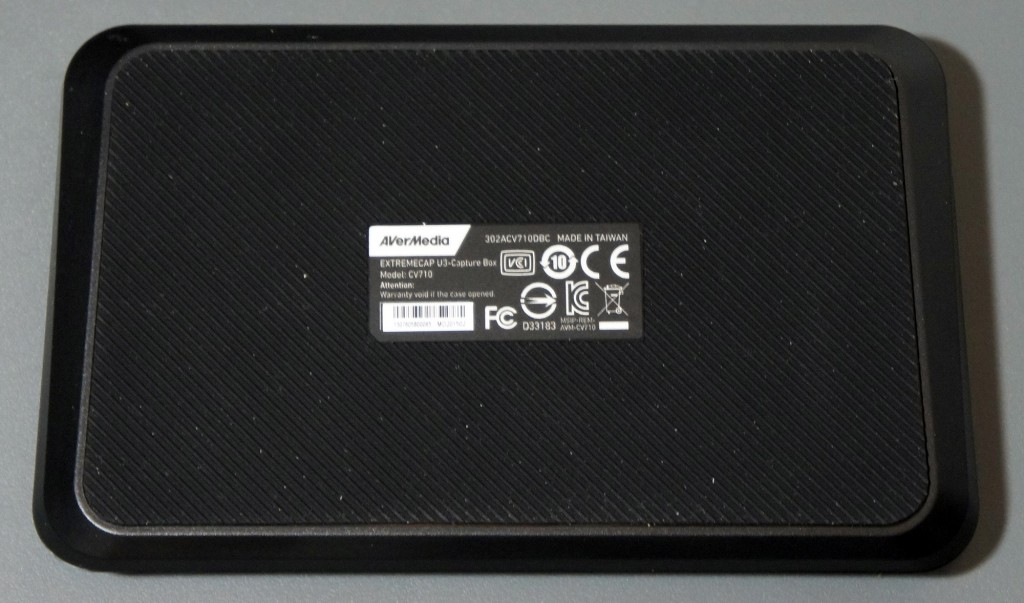

In any case, after researching for a while longer, I settled on the AVerMedia CV710 ExtremeCap U3 capture card. The ExtremeCap U3 was released in late September 2013, and promised features such as 1080p game capture at 60 frames per second, and audio capture (including the ability to capture audio from a microphone attached to the PC), all in a tiny form factor that is ridiculously easy to set up and use, as it relies only on a USB 3.0 connection to the PC. AVerMedia was gracious enough to send one for us to review.

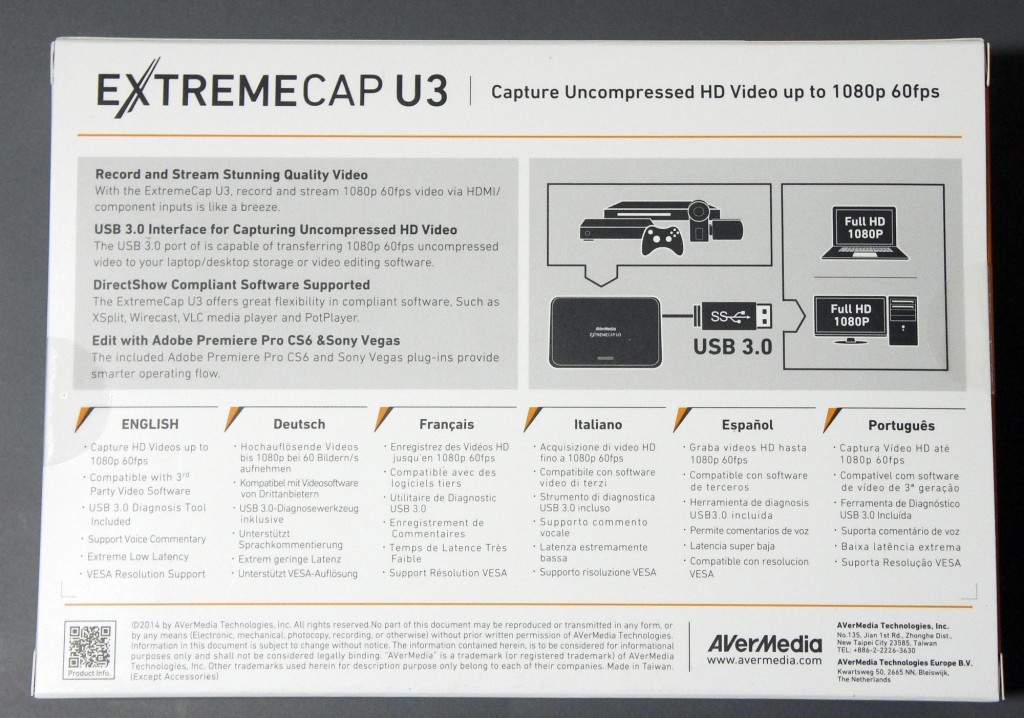

Here are the specs for the CV710 ExtremeCap U3:

Specifications –

- Input:

  - HDMI

  - Component

- Recording Format:

  - MP4 (H.264, AAC)

- Chroma Subsampling:

  - YUV 4:2:2

Applications –

- Bundled Software:

  - AVerMedia RECentral

  - USB 3.0 Diagnosis Tool

- 3rd Party Plug-ins:

  - Adobe Premiere Pro CS6

  - Sony Vegas Pro

- Compatible Software:

  - DirectShow compliant software: e.g., XSplit, OBS, AmaRecTV, Potplayer

System Requirements for PC –

- For FHD 1080p 60 FPS recording (H.264):

  - Desktop:

    - CPU – Intel® Core™ i5-3400 (Ivy Bridge) or above

    - Graphics Card – NVIDIA GT630 or above

    - RAM – 4 GB

  - Laptop:

    - CPU – Intel® Core™ i7-3537U 2.0 GHz (Ivy Bridge) or above

    - Graphics Card – NVIDIA GT 735 M or above

    - RAM – 4 GB

- For HD 720p 60 FPS recording (H.264):

  - Intel® Core™ i3-3200 series with Ivy Bridge platform and 4 GB RAM

- Intel chipset with native USB 3.0 host controller (Can be used together with these certified chipsets: Renesas, Fresco, VIA, ASMedia)

- Power requirement: USB 3.0 power

- Graphics Card: DirectX 10 compatible

- Windows 8.1 / 8 / 7 (32 / 64 bit)

System Requirements for Mac –

- OS: Mac OS X v10.9 or later

- CPU: i5 quad-core or above for FHD 1080p 60 FPS recording (H.264)

- Power requirement: USB 3.0 power

- RAM: 4 GB or more

- HDD: At least 500 MB of free space

In the package –

- ExtremeCap U3 (Weight: 182g)

- Quick Installation Guide

- USB 3.0 cable

- Component Video / Stereo Audio Dongle Cable

The ExtremeCap U3 was incredibly easy to set up. First, I plugged the device into a USB 3.0 port on the computer. Next, I installed the latest software and drivers for Windows (since the PC used was running Windows 8.1). Interestingly enough, all the downloads on AVerMedia’s AP & Driver page have a counter for how many times each file has been downloaded. Considering the driver and software package we needed has been downloaded approximately 2000 times, it seems like we’re in a fairly small group of people that have an ExtremeCap U3.

Once that was done, we opted to run the bundled USB 3.0 Diagnosis Tool which checks to make sure that the USB 3.0 chipset on the computer is compatible with the ExtremeCap U3. Our system is running on the Intel X79 chipset, which lacks native USB 3.0 support, but the Fresco FL1009 powered USB 3.0 ports on our Gigabyte X79-UD7 were deemed fully compatible with the ExtremeCap U3.
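To give a feel for why a card like this leans on USB 3.0, here is a rough sketch comparing the bandwidth of an uncompressed 1080p60 feed in YUV 4:2:2 against nominal USB link rates. Two things here are my assumptions rather than anything AVerMedia states above: 8-bit samples (so 16 bits per pixel on average), and that the card sends uncompressed frames to the PC.

```python
# Rough estimate of uncompressed capture bandwidth versus USB link rates.
# Assumptions: 8-bit samples, so YUV 4:2:2 averages 16 bits per pixel,
# and the card sends uncompressed frames (not confirmed by the spec list).

USB_LINK_MBPS = {"USB 2.0": 480, "USB 3.0": 5000}  # nominal signaling rates

def yuv422_mbps(width, height, fps, bits_per_sample=8):
    """Uncompressed YUV 4:2:2 bitrate in megabits per second."""
    bits_per_pixel = 2 * bits_per_sample  # one luma + (on average) one chroma sample
    return width * height * bits_per_pixel * fps / 1_000_000

needed = yuv422_mbps(1920, 1080, 60)
for name, link in USB_LINK_MBPS.items():
    verdict = "enough" if needed < link else "not enough"
    print(f"{name}: {link} Mbps nominal, {verdict} for {needed:.0f} Mbps")
```

Under those assumptions the feed needs roughly 2 Gbps, which is several times what USB 2.0 offers but comfortably inside USB 3.0's nominal rate.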

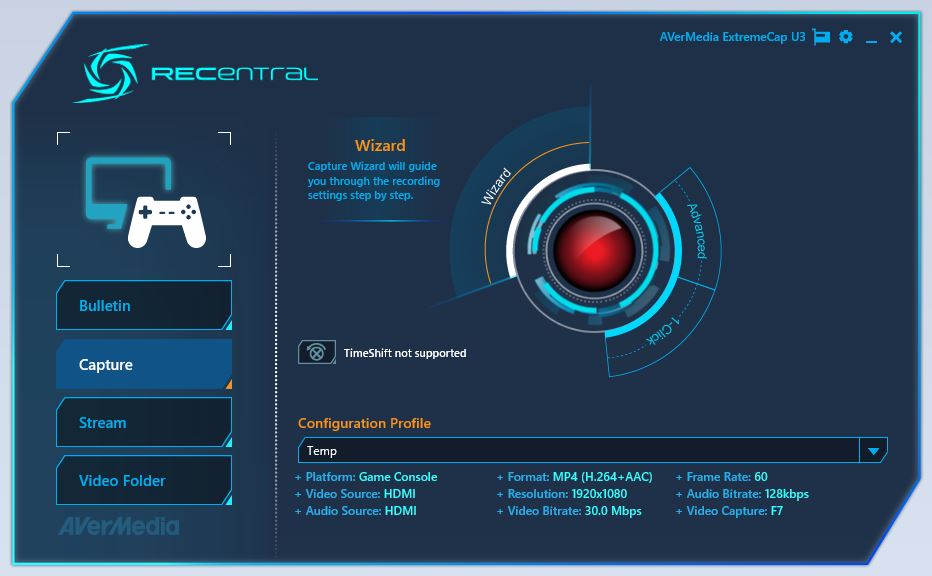

We plugged our Wii U into the ExtremeCap U3 using an HDMI cable, and fired up the AVerMedia RECentral software. The menu that greeted us was fairly straightforward, giving us the option to choose whether we wanted to capture video or stream; and in both cases we could opt to use the Wizard to walk us through setting up our options. Alternatively, we could choose the 1-Click setting option which would automatically choose the recommended settings, or pick the Advanced setting option to be able to manually set everything up.

We went through the Wizard and used the 1-Click option, both of which worked flawlessly, but we preferred to choose our own settings manually to be able to tweak them, so we ended up using the Advanced settings option for our recording.

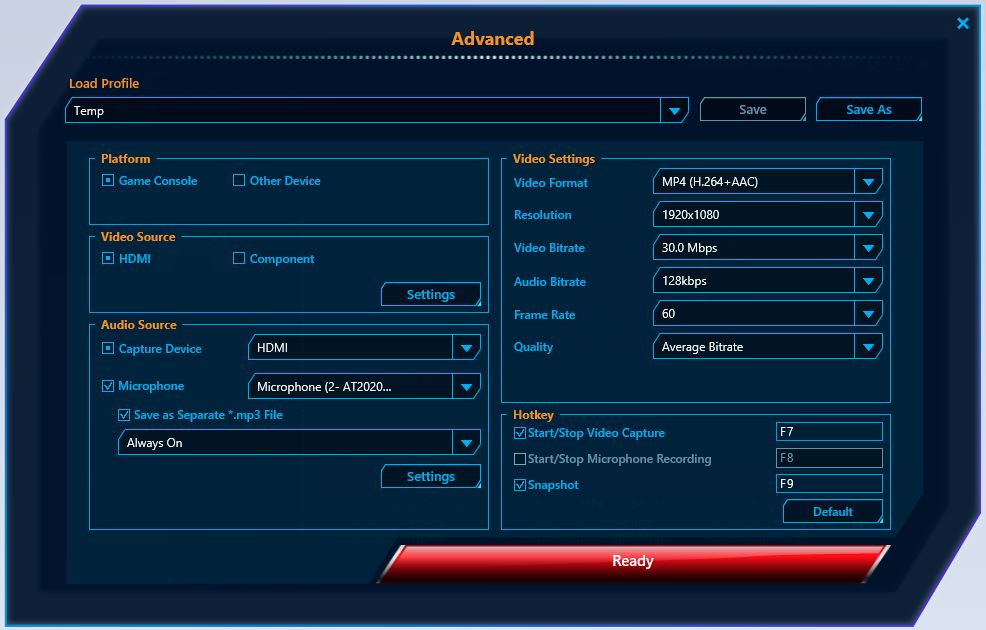

In the Advanced menu, we could tweak a variety of settings. The most notable options were the video format, resolution, framerate, and bitrate. We also could opt to record audio from a microphone connected to the PC and save it in the same file or as a separate mp3, which we found to be exceptionally useful for our video.

Once the settings were chosen, we were ready to go. We hit the ‘Ready’ button on the menu, and got to playing. Recording was a simple operation: it was started and stopped with the press of a single button or the desired key combination.

We found the recording quality to be exceptionally good. We opted to capture 1080p video with a 60 FPS framerate, an audio bitrate of 128 Kbps (since we were recording in stereo, that ends up being a bitrate of 256 Kbps), and a video bitrate of 30 Mbps. Our video bitrate was probably a fair bit higher than what’s necessary to record even at that resolution and framerate, but since our setup includes a 4x4TB RAID 10 array (8TB effective size), we weren’t too concerned about being frugal with our hard drive space.
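For anyone wondering how quickly those settings eat through disk space, the arithmetic can be sketched from the bitrates quoted above. The only assumption I'm adding is decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes); container overhead is ignored.

```python
# Estimate recording size from the bitrates quoted above.
# Assumption: decimal units (1 GB = 10**9 bytes, 1 TB = 10**12 bytes).

VIDEO_MBPS = 30.0               # video bitrate used in our recording
AUDIO_KBPS_PER_CHANNEL = 128    # audio bitrate per channel
CHANNELS = 2                    # stereo

def gb_per_hour(video_mbps, audio_kbps_total):
    """Return gigabytes written per hour of recording."""
    total_bits_per_sec = video_mbps * 1_000_000 + audio_kbps_total * 1_000
    return total_bits_per_sec * 3600 / 8 / 1e9

def raid10_usable_tb(drives, tb_per_drive):
    """RAID 10 mirrors every drive, so usable space is half the raw total."""
    return drives * tb_per_drive / 2

hourly = gb_per_hour(VIDEO_MBPS, AUDIO_KBPS_PER_CHANNEL * CHANNELS)
usable = raid10_usable_tb(4, 4)
print(f"{hourly:.1f} GB per hour of footage")
print(f"{usable:.0f} TB usable, roughly {usable * 1000 / hourly:.0f} hours of footage")
```

That works out to roughly 13.6 GB per hour, so an empty 8TB array would hold a bit under 600 hours of footage at these settings.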

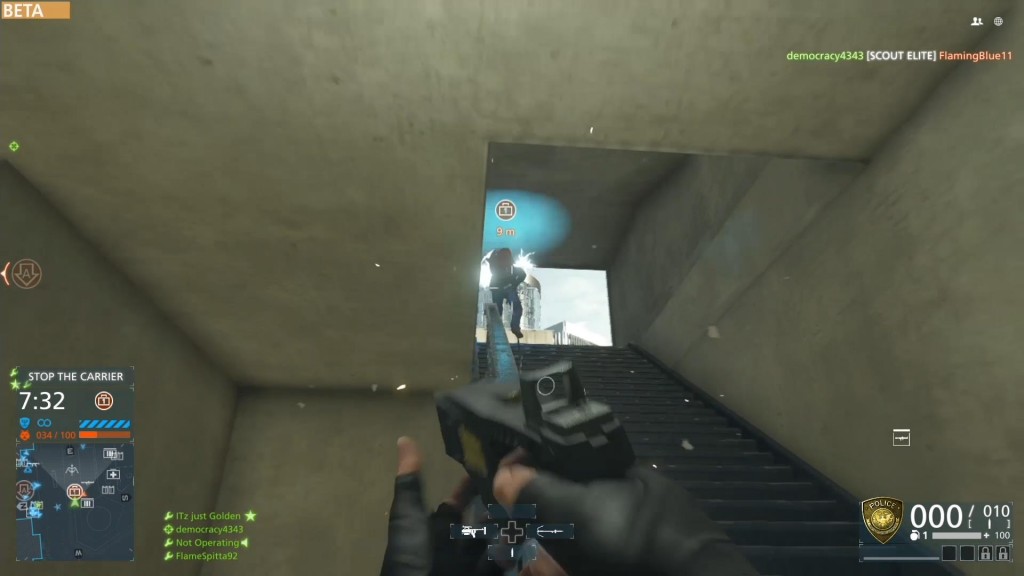

While recording our first episode of Digital Ops (where we played the Battlefield Hardline Beta), we started out recording using the Xbox One’s Twitch.tv application, and then switched to the ExtremeCap U3. The difference in quality was staggering, as can be seen in the screenshots below.

To see how video recorded with the ExtremeCap U3 looks, check out Episode 1 of Digital Ops here. We recommend viewing it using Google Chrome, as YouTube allows for 1080p video to be played at 60 FPS when accessed using Chrome.

Overall, we came away extremely impressed with the AVerMedia CV710 ExtremeCap U3 capture card. The build quality feels great, it’s small and fairly portable, it’s extremely easy to set up and use, and the software is fantastic. At a retail price point that is currently hovering around $170, it’s not the cheapest card on the market, but it certainly cannot be beaten on features or ease of use. We’d have no hesitation in recommending the ExtremeCap U3 to anyone looking to buy a capture card.

Battlefield Hardline – Or Flatline?

March 7, 2015

Tags: shooter, battlefield hardline, cops, bf4, gaming, police, ea, battlefield, field, visceral, beta, fps, battlefield hardline beta, games, robbers, bfh, battle, dice, hardline

Categories: Gaming, Gaming Analysis

I’ll admit it. I am a bit biased when it comes to the Battlefield series. Playing Battlefield 2 on PC opened my eyes to the world of large-scale combat in an FPS, in all of its dolphin-diving, C4-chucking glory. Not only was I drawn in by the stunning graphics and beautifully designed multiplayer maps, but I could not get enough of the freedom (and subsequently, chaos) that the multitude of weapons, vehicles, and gadgets at my disposal allowed. Unfortunately, these large-scale battles contribute to perhaps the biggest complaint gamers have with Battlefield: the maps are too big and the matches last too long, which leads to boring gameplay experiences. With the imminent release of Battlefield Hardline, a cops and robbers twist on the typical military warfare that the Battlefield series is known for, EA’s Visceral Games look to combat that criticism by developing “The Fastest Battlefield Ever.”

Multiplayer

As mentioned above, Battlefield Hardline pits cops against robbers at the center of the conflict this time around. While it might be a bit of a stretch to picture hordes of law enforcement officers and criminals waging war in the streets of Los Angeles, the change in setting led the way for some new features to be introduced in the game. First and foremost, player movement speed has been increased from previous Battlefield games. This modification, in addition to the inclusion of faster cars and motorcycles, was designed to help speed up the pace of multiplayer matches and get players back into the action quicker. Gone will be the days of endless wandering to find combat, just to get picked off along the way and be forced into doing it all over again.

In addition to new multiplayer modes like Heist and Hotwire, which you can check out highlights of in Not Operator’s first episode of Digital Ops here, Hardline added a slew of new weapons and gadgets. Hands down, my favorite weapon to use in the most recent multiplayer beta for Hardline, which came to a conclusion on February 9th, was the Taser. Despite the limited effective range, one direct hit on an enemy with the Taser will cause the poor soul unfortunate enough to be caught in your sights to convulse and light up like a human Christmas tree. More importantly, a direct hit with the Taser will count as a kill for you on the scoreboard, but will be considered a “non-lethal takedown.” If you manage to perform a non-lethal takedown on another player (the only other way to perform a non-lethal takedown is to successfully land a melee hit from behind), you will have a brief window to “interrogate” the target. Doing so will reveal the location of nearby enemies on your minimap, and as such, is a nifty little mechanic to have in the game.

Keeping up with the cops and robbers motif, instead of unlocking gear as you level up as in previous Battlefield installments, Hardline will award you with cash for all of your in-game actions. Kills, captures, assists, and more will all net you varying amounts of money, which can then be spent on purchasing new guns, attachments, gadgets, melee weapons, and other customizable features such as weapon camos. Battlefield purists might be upset by this change, but the fact that all weapons and gadgets will be available for purchase from the get-go will help to put players on a more even playing field, and that is alright with me.

Levolution™, one of the best, and coincidentally hammiest, buzzwords of all time, makes its triumphant return in Hardline. First introduced in Battlefield 4, this mechanic alters the flow of a match by creating a dynamic gameplay change in a multiplayer map. For example, one of the two maps with ‘Levolution’ in the beta, Dust Bowl, set in a residential desert town (complete with meth lab), experiences a debilitating dust storm that impairs player vision and restricts the player’s line of sight. In the other beta map with ‘Levolution’, High Tension, players can cause a construction crane to crash down, bringing some chunks of buildings and a bridge with it. The mechanic is supposed to exist in every multiplayer map this time around, so I am curious to see the other ‘Levolutions’ Visceral came up with. Love it or hate it, ‘Levolution’ is a fun feature that switches up the typical gameplay experience, and I am glad it is making its way into Battlefield Hardline.

I also feel it is important to mention that while helicopters are returning in Hardline, jets will not be included in the game. Let’s be honest though… what sort of police department has a budget big enough for an F-18?

Single-Player

With the exception of the Bad Company games, the Battlefield series has not historically been known for the quality of its single-player campaigns (though I still believe the Battlefield 4 story was far better than that of Call of Duty: Ghosts, which was released around the same time). DICE, the original developers behind the Battlefield series, passed the torch for Hardline on to Visceral Games, the studio that created one of my favorite horror games of all time, Dead Space. Nevertheless, many questions still surround Hardline’s single-player campaign, as not much has been seen from story mode aside from an entertaining 12 minute gameplay trailer released at Gamescom last August. As we have all become well aware by now, trailers are one thing and the final product is another.

The campaign, which is being advertised as an engrossing “episodic crime drama” along the lines of Miami Vice, also promises a variety of gameplay options granting freedom over how to deal with enemy encounters. Want to handle every situation guns blazing? Go ahead. Prefer a more subtle touch? Grab a suppressed gun or a Taser and pick off the bad guys one by one on your way as you sneak to the objective. While this is nothing mind-blowingly cutting-edge for a video game, having the freedom to play to your preferred gameplay style helps to alleviate the linearity most FPS campaigns are known for. Considering that there is a talented development team behind the wheel, especially one that is particularly good at atmospheric storytelling, I am remaining optimistic that Battlefield Hardline’s story mode will not just be seen as a chore to get more achievements/trophies.

Should Hardline Have Just Been DLC?

The primary grievance gamers seem to have with Battlefield Hardline is that the game is being released as a $60 standalone game, whereas many feel that it should just be released as a downloadable expansion for Battlefield 4. Some Battlefield fans were even vocal enough to start a petition to try and make that happen, though it didn’t work. Another (deserved) criticism plaguing the Battlefield franchise in recent years is that the games have been riddled with glitches and bugs. The most recent installment in the series, Battlefield 4, launched with debilitating matchmaking, gameplay, and UI issues which basically rendered the game unplayable until the problems could be patched.

Looking to learn from the sins of its past, EA recently concluded the second beta test of Battlefield Hardline, which was comforting since Hardline was originally scheduled to launch on October 21st 2014. In an effort to try and win back some fan confidence, and possibly prevent a few lawsuits this time around, the game was delayed to 2015 in order to allow the developer more time to “push Hardline innovation further and make the game even better.” In addition to improving the quality of the game, this delay also helped to widen the gap between the release of Hardline and Battlefield 4, which came out at the end of October 2013. It is reassuring to see that EA is not forcing the Battlefield series into becoming an annual release franchise, like Call of Duty and Assassin’s Creed. This will hopefully allow for more attention to be spent on overall game quality rather than just pumping out the next iteration of a game to try and make a quick buck.

Battlefield will always hold a special place in my heart. I enjoyed my time playing in the multiplayer beta, and the story mode appears to be interesting. With no additional FPSs coming to market for a while, Hardline might be worth picking up. However, will Battlefield Hardline succeed in being the adrenaline shot to the heart of the series that it is trying to be? Find out when the game launches on March 12th for EA Access members (Xbox One only), or March 17th for PC, Xbox One, PS4, Xbox 360, and PS3.

Review: Kingston HyperX Cloud II Headset

February 16, 2015

Tags: headphones, kingston, hyper x, cloud ii, notoperator, headset, not operator, microphone, hyperx, cloud, notop, cloud 2, gaming

Categories: Tech Reviews, Technology

Kingston has released a successor to the original HyperX Cloud headset, appropriately named the HyperX Cloud II.

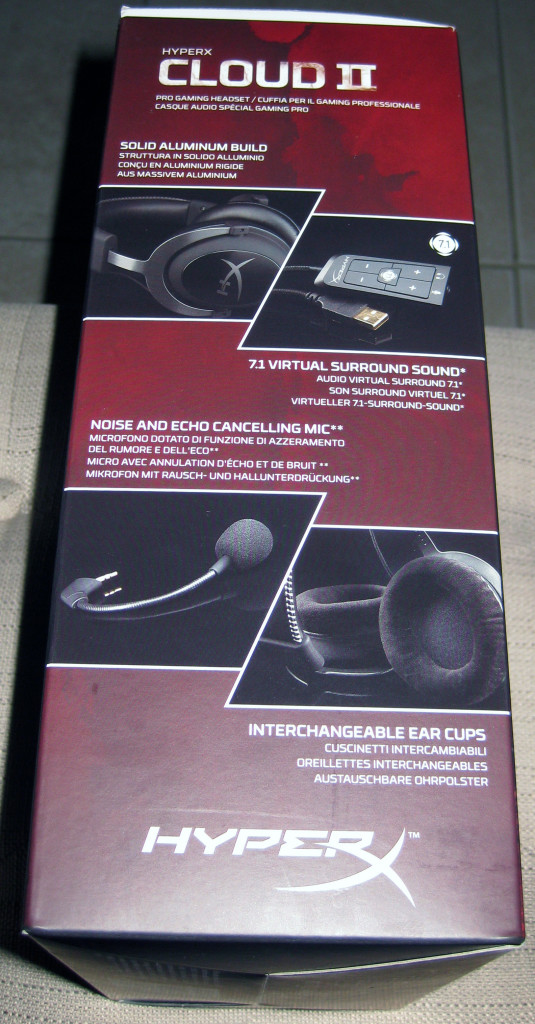

The Cloud II was released on February 9th, 2015, in two color variants: gun metal and red (referring to the parts of the headset that connect to the earcups; the headset itself is black in both cases). Our review model, pictured below, is the gun metal variant, which also includes some white stitching that contrasts nicely with the black of the headset and the gun metal aluminum parts. Also available for the Cloud II is a limited edition pink (which also refers to the earcup holders; the headset in this case is actually white).

The specifications of the new HyperX Cloud II headset are as follows:

Headset:

- Transducer Type: dynamic Ø 53mm

- Frequency Response: 15Hz–25,000 Hz

- Nominal SPL: 98±3dB

- Operating principle: closed

- Nominal impedance: 60 Ω per system

- T.H.D.: < 2%

- Power handling capacity: 150mW

- Sound coupling to the ear: circumaural

- Ambient noise attenuation: approx. 20 dBa

- Headband pressure: 5N

- Weight with microphone and cable: 320g

- Cable length and type: 1m + 2m extension

- Connection: single mini stereo jack plug (3.5 mm)

Microphone:

- Transducer Type: condenser (back electret)

- Polar Pattern: cardioid

- Frequency Response: 50-18,000 Hz

- Operating principle: pressure gradient

- Power supply: AB powering

- Supply voltage: 2V

- Current consumption: max 0.5 mA

- Nominal impedance: ≤2.2 kΩ

- Open circuit voltage: at f = 1 kHz: 20 mV / Pa

- THD: 2% at f = 1 kHz

- SPL: 105dB SPL (THD≤1.0% at 1 KHz)

- Microphone output: -39±3dB

- Length mic boom: 150mm (include gooseneck)

- Capsule diameter: Ø6*5 mm

- Connection: single mini stereo jack plug (3.5mm)

The headset comes in impressive packaging, and the box is of very high quality that can be kept for repeated use. Additionally, the Cloud II comes with a pouch to store the headset, cabling, and microphone attachment for convenience while travelling. The headset also includes a dual port adapter meant for use on airplanes, which is a nice feature to have.

The Cloud II headset itself is high in quality, and the metal part of the headset that connects the earcups to the band does a lot to enhance that feeling. It is very sturdy and fits well on the head.

The earcups in particular are very comfortable, with a leather-ish exterior and cushion that feels similar to memory foam. Kingston also includes alternate velvet-like earcups for people who prefer a different feel, which are also quite comfortable.

Even during extended use, the headset maintains its comfort. Personally, my experience with headsets is that they tend to become uncomfortable after wearing them for hours at a time, but this was not the case with the Cloud II. The headset is also well ventilated and did not cause sweat to build up during my usage.

Since the HyperX Cloud II is marketed as a gaming headset, it needs to provide solid audio quality, especially when it comes to mids and highs. Fortunately, the headset delivers crisp highs, clear mids, and even tight lows, without any perceived distortion along the entire range. The variety of listening material tested included orchestra music, action movie sequences, and conference calls. While the headset is not comparable to active noise-cancelling headphones, it performed well at keeping out extraneous sounds and would thrive in a LAN environment.

The Total Harmonic Distortion, or THD, of the headset is rated at a respectable < 2% but its performance seemed better than some competitors who claim < 1%. This could possibly be due to the design of the overall headset and the pressure created by the loop, which ensures an effective noise isolation barrier from the external environment.

The Cloud II's microphone also provides excellent sound output, to the point that other participants in conference calls commented on the clarity of my voice. It includes a windscreen which effectively filters out breathing sounds as well.

The headset also comes with a USB soundcard that includes a 7.1 Surround Sound option that can be enabled/disabled with the press of a single button. It also provides volume controls for both the audio and the microphone, and has a built in clip which allows for convenient access during gaming sessions.

With 7.1 Surround enabled, the audio appears to boost the mid-range slightly while also echoing it slightly out of phase to make the audio sound as though it is filling up the room around you. This is opposed to having the 7.1 Surround disabled, which causes the audio to sound as though it is emanating directly into your ears (which it is).

While listening to music, the 7.1 Surround enhanced orchestral music as it gave it a sense of presence, but for other music types I preferred to listen with 7.1 disabled. However, for both movies and games, the 7.1 Surround provided a really nice audio effect, making the action sound as though it was all around you.

It should be noted that the 7.1 Surround option is only available when plugged into the soundcard, requiring the use of USB as an input. If you want to use the 3.5mm connectors, you’ll have to forgo the soundcard.

A couple of small complaints I have are that the microphone is detachable and the microphone cover is not attached to the headset. Since both the microphone and microphone cover are detachable, these pieces could be lost if they are not cared for properly. This is especially true for the cover, which could be easily misplaced due to its smaller size. Losing the microphone cover would be unfortunate, as it is required to prevent dust from getting into the headset when the microphone is unplugged. While having the detachable microphone provides the advantage of being able to plug any 3.5mm microphone into the headset, I would rather forgo this option in order to have a permanently attached retractable microphone.

At an MSRP of $99, the Kingston HyperX Cloud II is a high quality headset at a good value. It boasts great audio quality and a clear sounding microphone, is very comfortable to wear, and feels durable and well built. The 7.1 Surround Sound USB card adds another level of customization, and the add-ons and storage pouch that come with the headset make it an even greater purchase.

Digital Ops: Let's Play Episode 1 - Battlefield Hardline Beta

February 15, 2015

Tags: hardline beta, digital, ops, battlefield, not, let's play, beta, notoperator, battlefield hardline beta, digital ops, notop, digitalops, hardline, operator, episode 1, battlefield hardline, not operator

Categories: Digital Ops, Gaming

In the first episode of Digital Ops, Not Operator's Let's Play series, Ryan and Michael take a look at the three gametypes included in the Battlefield Hardline Beta: Heist, Hotwire, and Conquest.

Stay tuned for our upcoming discussion of the Battlefield Hardline Beta as well.

Review: Digital Storm Bolt II

January 28, 2015

Tags: gaming pc, digital, intel, small form factor, digital storm, review, mini, form, bolt, nvidia, miniitx, bolt 2, gpu, itx, sff, storm, cpu, small, ds, pc, factor, bolt ii, amd, mini itx

Categories: Technology, Tech Reviews

Digital Storm is a company well known for pushing the limits of high-end desktops. While they have a variety of different models, only two fit into the 'slim' Small Form Factor (SFF) category. Whereas the Eclipse is the entry-level slim SFF PC designed to appeal to those working with smaller budgets, the Bolt is the other offering in the category, and aims much higher than its entry-level counterpart.

I had the opportunity to review the original Bolt when I still wrote for BSN, and I loved it, though there were a few design decisions I wanted to see changed.

Today I'm taking a look at the Digital Storm Bolt II. The successor to the original Bolt, it has undergone a variety of changes when compared to its predecessor. Two of the most common complaints with the original were addressed; the Bolt II now supports liquid cooling for the CPU (including 240mm liquid coolers), and the chassis has been redesigned to have a more traditional two-panel layout (as opposed to the shell design of the original).

Specifications:

The Bolt II comes in four base configurations that can be customized further:

Level 1 (Starting at $1674):

- Base Specs:

- Intel Core i5 4590 CPU

- 8GB 1600MHz Memory

- NVIDIA GTX 760 2GB

- 120GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS H97 Motherboard

- 500W Digital Storm PSU

Level 2 (Starting at $1901):

- Base Specs:

- Intel Core i5 4690K CPU

- 8GB 1600MHz Memory

- NVIDIA GTX 970 4GB

- 120GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS H97 Motherboard

- 500W Digital Storm PSU

Level 3 (Starting at $2569):

- Base Specs:

- Intel Core i7 4790K CPU

- 16GB 1600MHz Memory

- NVIDIA GTX 980 4GB

- 120GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS Z97 Motherboard

- 500W Digital Storm PSU

Battlebox Edition (Starting at $4098):

- Base Specs:

- Intel Core i7 4790K CPU

- 16GB 1600MHz Memory

- NVIDIA GTX TITAN Z 6GB

- 250GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS Z97 Motherboard

- 700W Digital Storm PSU

Note, the system I received for review came out prior to the release of the Nvidia GTX 900 series cards. These are the specifications for that system:

Reviewed System:

- Intel Core i7 4790K CPU (Overclocked to 4.6 GHz)

- 16GB DDR3 1866MHz Corsair Vengeance Pro Memory

- NVIDIA GTX 780Ti

- 500GB Samsung 840 EVO SSD

- 2TB 7200RPM Western Digital Black Edition HDD

- ASUS Z97I-Plus Mini ITX Motherboard

- 500W Digital Storm PSU

- Blu-Ray Player/DVD Burner Slim Slot Loading Edition

This system is pretty impressive considering how small it is. The Bolt II measures 4.4"(W) x 16.4"(H) x 14.1"(L), or for our readers outside of the US, approximately 11cm(W) x 42cm(H) x 36cm(L).
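For readers who want to check the conversion or get a feel for the overall size, here is a quick sketch. The volume figure treats the case as a plain rectangular box, which is my simplification and ignores the feet and any curves in the chassis.

```python
# Convert the Bolt II's quoted dimensions from inches to centimeters
# and estimate its volume, treating the case as a plain rectangular box.

CM_PER_INCH = 2.54  # exact by definition

dims_in = {"W": 4.4, "H": 16.4, "L": 14.1}
dims_cm = {axis: inches * CM_PER_INCH for axis, inches in dims_in.items()}

for axis, cm in dims_cm.items():
    print(f"{axis}: {dims_in[axis]} in = {cm:.1f} cm")

# 1 liter = 1000 cubic centimeters
volume_liters = dims_cm["W"] * dims_cm["H"] * dims_cm["L"] / 1000
print(f"Approximate volume: {volume_liters:.1f} liters")
```

By that rough measure the chassis comes in under 17 liters.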

Design and Software

The Bolt II ships with Windows 8.1, but also comes with Steam preinstalled with Big Picture mode enabled, due to the fact that the Bolt II was originally slated to be a hybrid Steam Machine (Windows and Steam OS dual boot). Since Steam Machines had yet to materialize, the Bolt II shipped as a gaming PC designed for the living room, including feet attached to the side panel so the system can sit on its side.

However, this design decision also makes sense of one of my largest annoyances with the computer; the front panel I/O ports are all on the right side of the chassis, near the front, at the bottom. If the system is stood up as one would typically have a desktop, the ports are moderately inconvenient to access. Conversely, if the system is placed on its side in a living room setting (such as under a television), the ports are perfectly accessible.

Digital Storm mentioned to us that once Steam Machines are ready to roll, they'll be shipping the Bolt II as it was originally intended: as a hybrid Steam Machine. Luckily for us, Steam Machines are coming out this March, so expect to see some hybrid systems from Digital Storm soon.

One of the joys of a boutique system is a comforting lack of bloatware, as the Bolt II comes with very little preinstalled. The only software installed on the system was the system drivers, Steam (as previously mentioned), and the Digital Storm Control Center. Here is what the desktop looked like upon initial boot:

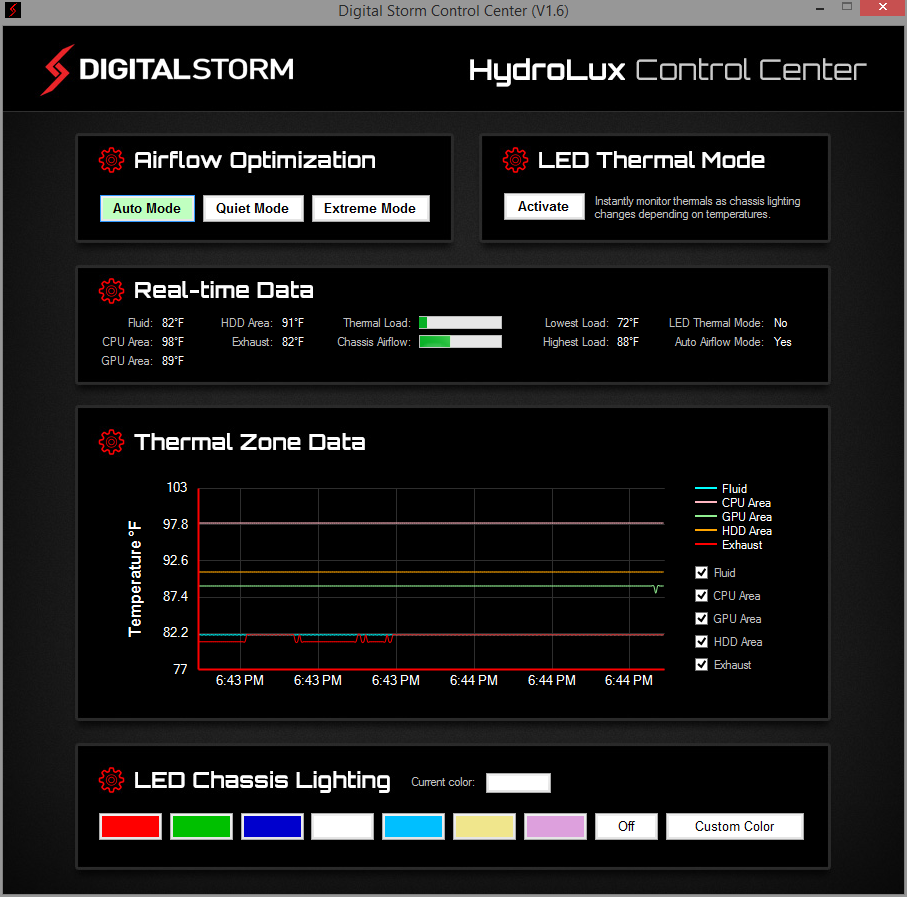

The Digital Storm Control Center is a custom piece of software for Digital Storm PCs designed to manage a system's cooling and lighting and provide metrics for the user. Much to my surprise, the software is designed and coded in-house, which explains the level of polish and quality the program possesses (as opposed to other companies that rely on 3rd party developers located in Asia with software solutions that often prove underwhelming).

Synthetic Benchmarks

Moving on to benchmarks, I'll start with the synthetics:

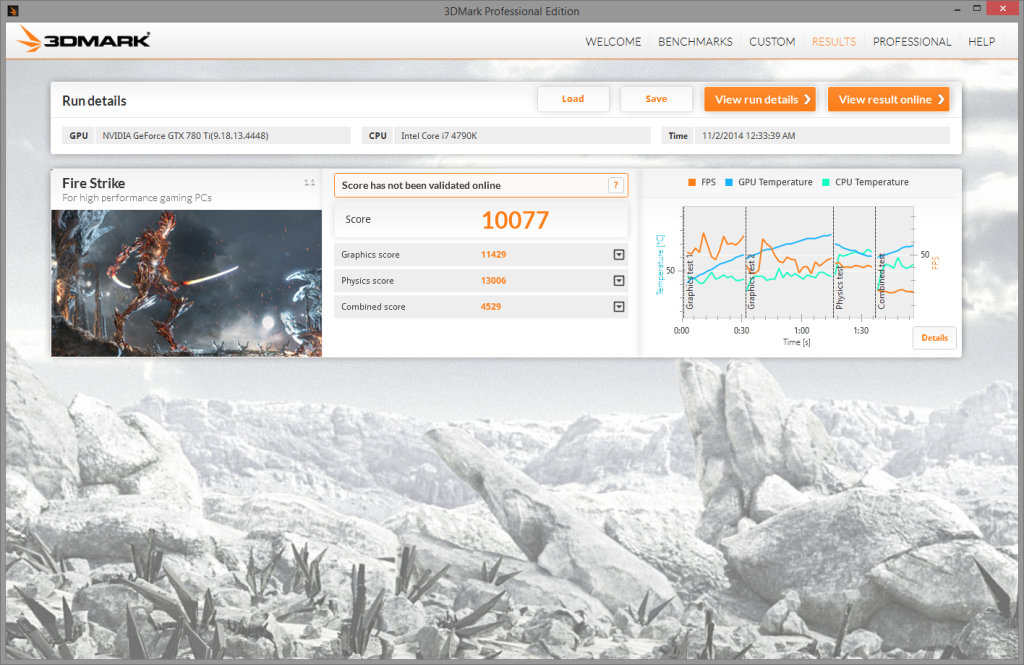

Starting with 3DMark's Fire Strike, the Bolt II managed a score of 10077.

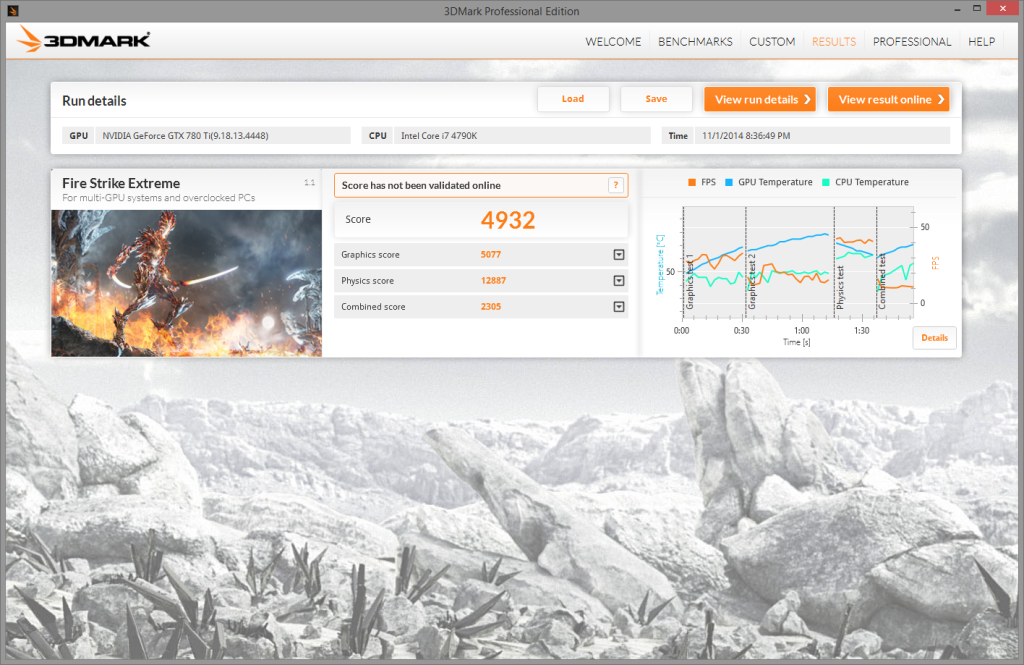

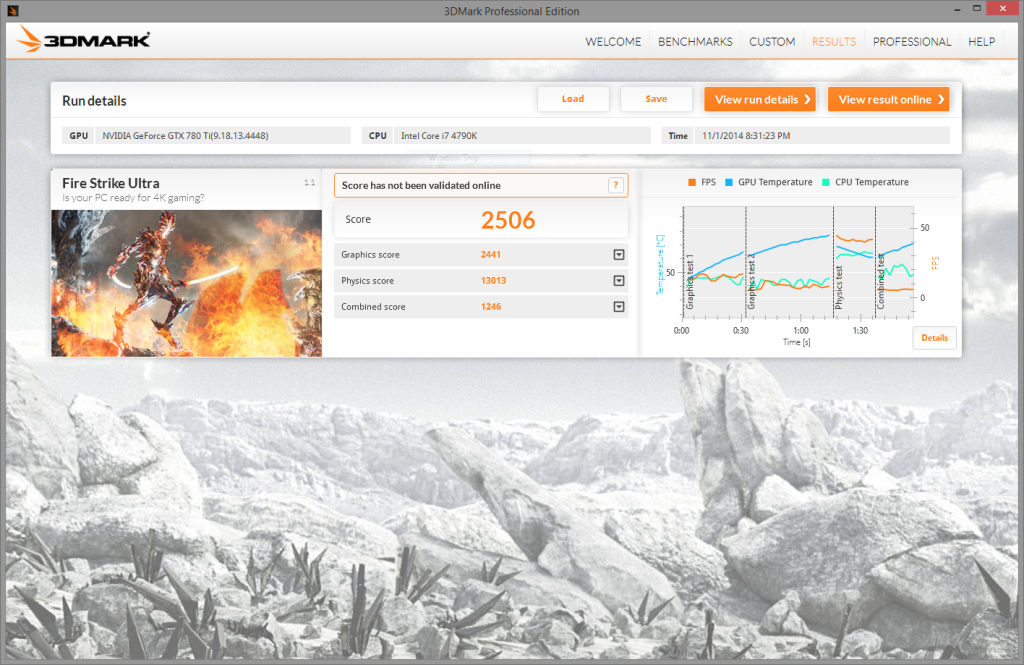

Running 3DMark's Fire Strike in Extreme mode, the system scores 4932.

Rounding out 3DMark's Fire Strike is Ultra mode, with the Bolt II getting a score of 2506.

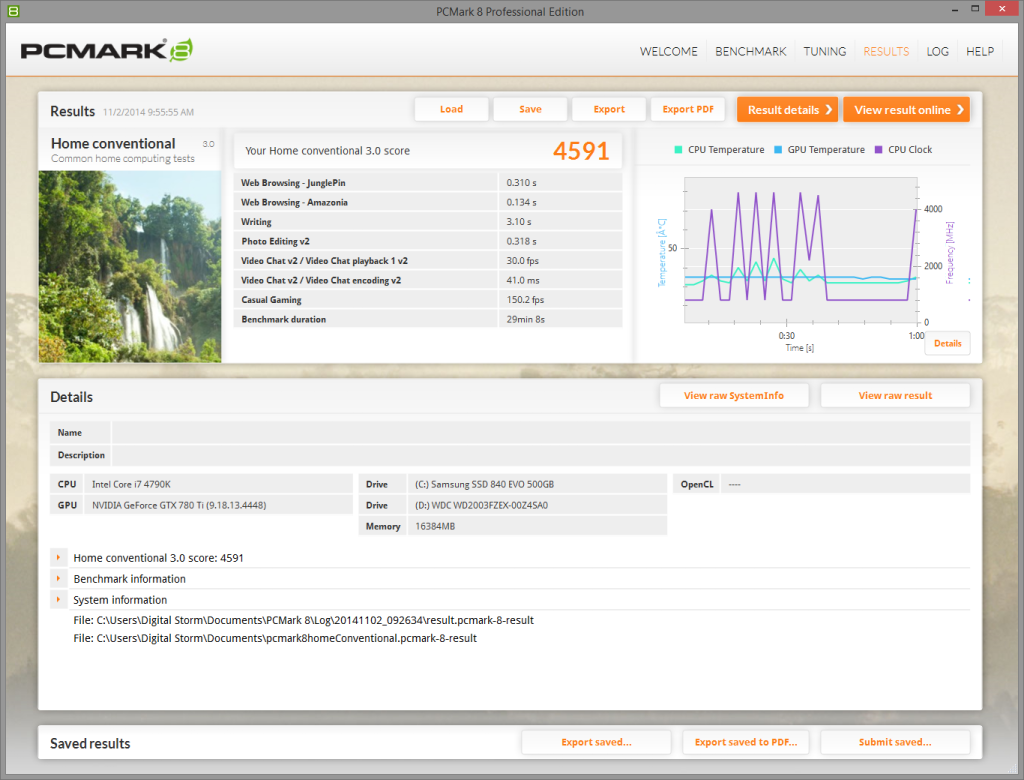

In PCMark 8, the Bolt II scores a 4591 when running the Home benchmark.

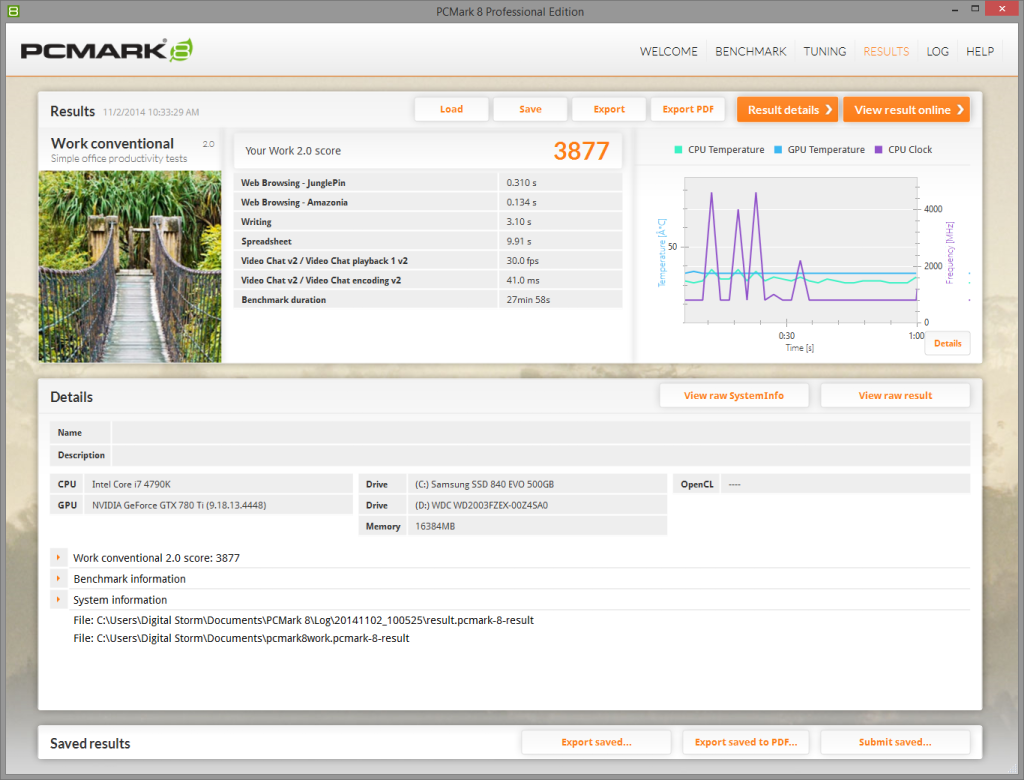

The other benchmark in PCMark 8 I ran was the Work benchmark, which had the Bolt II scoring 3877.

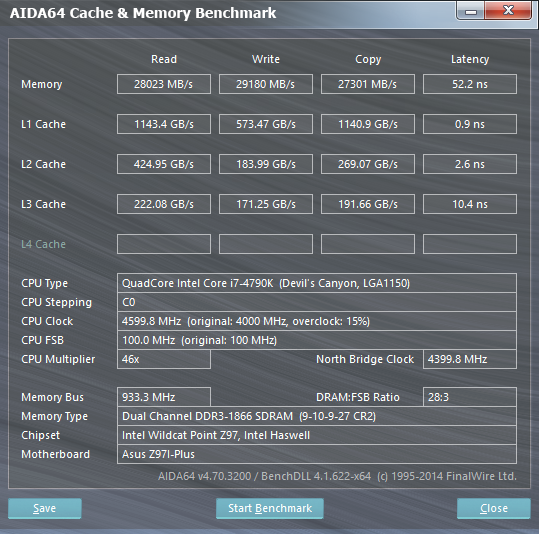

Now to cover AIDA64's sets of benchmarks:

The AIDA64 Cache and Memory benchmark yielded a Read of 28023 MB/s, Write of 29180 MB/s, Copy of 27301 MB/s, and Latency of 52.2 ns on the Memory.

The system got a Read of 1143.4 GB/s, Write of 573.47 GB/s, Copy of 1140.9 GB/s, and Latency of 0.9 ns on the L1 Cache.

It scored a Read of 424.95 GB/s, Write of 183.99 GB/s, Copy of 269.07 GB/s, and Latency of 2.6 ns on the L2 Cache.

Finally, it produced a Read of 222.08 GB/s, Write of 171.25 GB/s, Copy of 191.66 GB/s, and Latency of 10.4 ns on the L3 Cache.

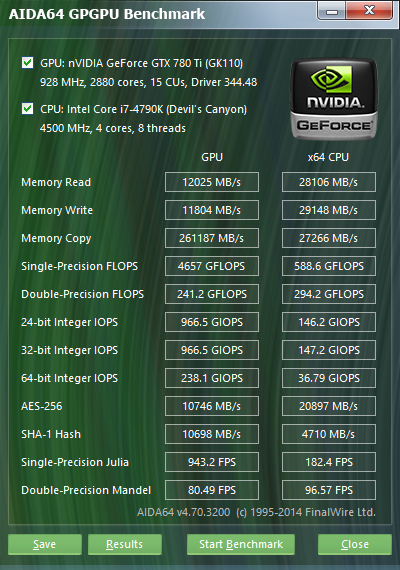

AIDA64's GPGPU Benchmark results are up next:

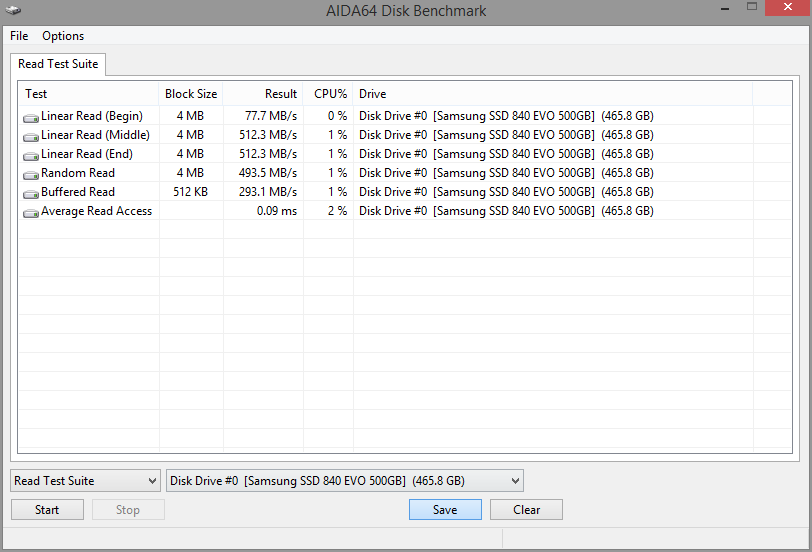

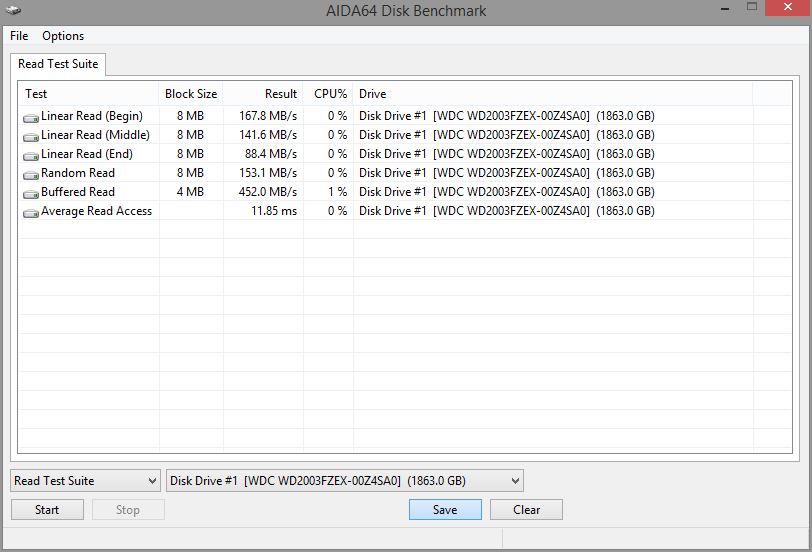

The AIDA64 Disk Benchmark was then run on both the Samsung 840 EVO SSD and the Western Digital 2 TB Black Edition HDD.

The Samsung 840 EVO SSD yielded an average Read of 378 MB/s across 5 different tests, and a latency of 0.09 ms.

The Western Digital 2 TB Black Edition HDD yielded an average Read of 201.5 MB/s across 5 different tests, and a latency of 11.85 ms.

Gaming Benchmarks

Moving on to the results of the gaming benchmarks, now that the synthetic benchmarks have been covered:

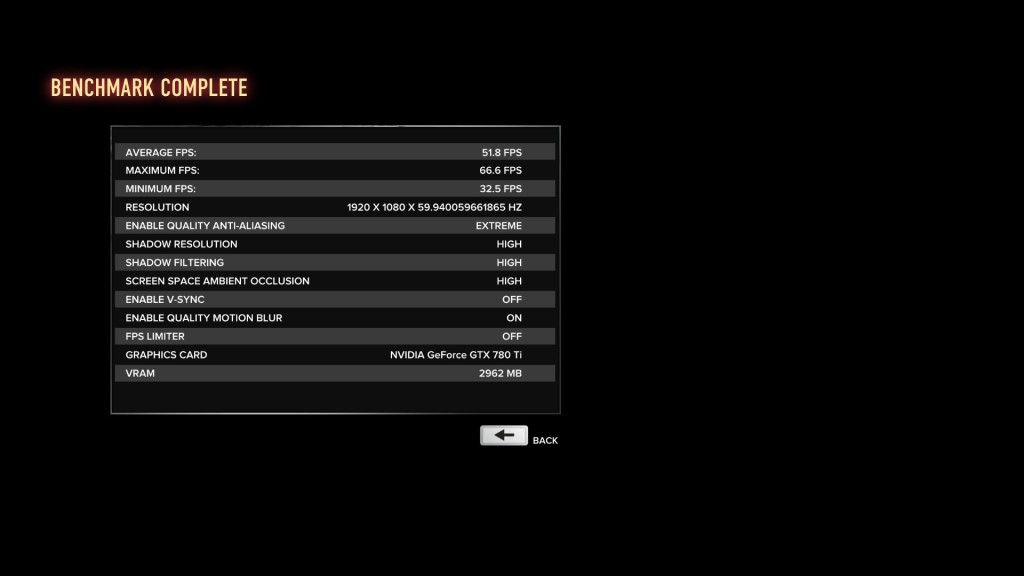

Starting off with Sleeping Dogs: Definitive Edition, the benchmark was run using the settings listed in the screenshot, and it scored an average of 51.8 FPS, a maximum of 66.6 FPS, and a minimum of 32.5 FPS.

Even when maxing out the game settings at 1080p resolution, the system maintains very playable framerates, which would look even better when paired with a display that utilizes FreeSync or G-Sync. In this case it would have to be G-Sync since this Bolt II configuration utilizes an Nvidia GPU.

Next up is Far Cry 4. I replayed the liberation of Varshakot Fortress in Outpost Master. Rather than use Nvidia's GeForce Experience optimized settings, which should theoretically provide the best overall experience, I cranked the game settings to Ultra at 1920x1080 resolution.

The game was very playable and smooth, getting an average of 82.6 FPS, a maximum of 665 FPS, and a minimum of 56 FPS.

Moving on, I tested out Assassin's Creed Unity, now that most of the bugs have been sorted out. I ran Unity at the Ultra High setting, 1920x1080 resolution, again ignoring Nvidia’s optimized settings in order to see how the game would run at the top preset. I played through the intro sequence of the game, and excluded cutscenes.

The game was certainly playable, and oftentimes smooth, though there were occasional framerate drops and a tiny bit of noticeable tearing. The game had an average of 38 FPS, a maximum of 53 FPS, and a minimum of 19 FPS. It would also have benefited from a FreeSync or G-Sync enabled display.

Value and Conclusion

Clearly the Bolt II is a very capable system, and the fact that it's available in a variety of customizable configurations to better fit the needs and budget of the consumer is a plus (it can be configured as low as $1100, or as high as $7800 excluding any accessories). Though I was impressed with the original Bolt, the Bolt II is a clear improvement in every way.

Whether or not the cost of the Bolt II is a good value is something that has to be determined by the consumer. Would it be cheaper to build yourself a PC with identical performance? Absolutely, even when building with the same or similar components. However, there are a few things that make the Digital Storm Bolt II stand out from a home-built PC.

First is the form factor. The Bolt II uses a fully custom chassis designed by Digital Storm, so no one building a PC at home can build into a chassis with the same dimensions. Most mini-ITX systems won't be anywhere near as slim.

Second is the warranty and guarantees of build-quality and performance of the system, which you can only get by purchasing a PC from a reputable company with a solid customer service record.

Third is the convenience. The Bolt II comes prebuilt, with the OS and drivers installed, and can also include a stable overclock verified by Digital Storm, custom lighting and cooling, as well as cable management. All of these factors sure make building your own seem like more pain than it’s worth.

When compared to other system builders with similar SFF systems, the Bolt II still comes out number one in terms of fit and finish, as well as build quality. The metal chassis and custom design are really impressive, and serve to differentiate the Bolt II from other systems in a way that the Digital Storm Eclipse doesn't (though that is the budget model of course).

If you were to ask me if I would build my own PC or purchase a Bolt II, my answer would probably still be to build my own system. However, this is simply due to personal preference; the form factor is not all that necessary for my current lifestyle, and I value the flexibility of choosing my own components and saving money more than the hassle of putting together the system myself. Despite all of this, were I to need a prebuilt PC with a small footprint, there's no doubt the Bolt II would be my first choice.

The First Not Operator Giveaway! – UPDATED

January 24, 2015tt,give away,brown,tt esports,kone,notop,keyboard,kone pure,gaming,cherry,thermaltake,giveaway,mx,esports,roccat,poseidon,pure,notoperator,mechanical,mouse,not operator,cherry mxMiscellaneous

Update 2: One of our winners didn't claim their prize, so we did a redraw and gave someone else the opportunity to claim it! Update 1 has been edited to reflect the new winner. Be sure to get back to us within 48 hours to claim your prize.

Update 1: Congratulations to our two winners, Harrison G and Dean Y! Be sure to check your email spam folder if you didn't receive an email from us, as you have 48 hours to claim your prize; otherwise we'll draw another winner or two.

To the rest of you, thanks for entering, and stay tuned as we'll have other giveaways in the future.

Welcome to the first Not Operator Giveaway!

We're giving away a Roccat Kone Pure laser gaming mouse as well as a Tt eSports (Thermaltake) Poseidon mechanical gaming keyboard (with Cherry MX Brown key switches).

The specifications for the Roccat Kone Pure are:

- Pro-Aim Laser Sensor R3 with up to 8200dpi

- 1000Hz polling rate

- 1ms response time

- 12000fps, 10.8megapixel

- 30G acceleration

- 3.8m/s (150ips)

- 16-bit data channel

- 1-5mm Lift off distance

- Tracking & Distance Control Unit

- 72MHz Turbo Core V2 32-bit Arm based MCU

- 576kB onboard memory

- Zero angle snapping/prediction

- 1.8m braided USB cable

Dimensions – Max. width 7cm x approx. 12cm max. length

Weight – Approx. 90g (excl. cable)

In the package – ROCCAT™ Kone Pure - Core Performance Gaming Mouse, Quick-Installation Guide

The Roccat Kone Pure has an MSRP of $69.99

The Tt eSports Poseidon mechanical gaming keyboard features Cherry MX Brown key switches, full backlighting with 4 levels of adjustable brightness, media keys, and a Windows Key lock option. It has an MSRP of $99.99.

To enter our giveaway, just follow us on Twitter @notoperating, tweet about our giveaway, and like us on Facebook. In addition, feel free to subscribe to our YouTube channel for an extra chance to win.

The giveaway will run from 1/25/2015 at 12:00 AM PST until 2/1/2015 at 12:00 AM PST, at which point we'll choose two winners, one of whom will receive the mouse, and the other the keyboard.

Unfortunately the giveaway is only available to readers located in the contiguous United States. Good luck to you all!

Evolve – The Next Evolution of Multiplayer?

January 24, 20152k games,xbone,xbox one,notoperator,turtle rock,gaming,2k,not operator,ps4,xbox,notop,game,evolve,games,turtle rock studios,pcGaming,Gaming Analysis

I remember the first time I played Evolve. At Comic Con last year, I braved the cosplaying hordes and made my way over to the Manchester Grand Hyatt to check out the Xbox Lounge and play some of the exclusive game demos within. When I walked into the Lounge, my attention immediately focused in on a large poster in the corner of the ballroom; a monstrous mark in the mud eerily reminiscent of the infamous T-Rex footprint from Jurassic Park, with just the phrase “EVOLVE” displayed above. I sped my way over to the demo area where I was greeted by a friendly team of the game’s developers, Turtle Rock Studios: “Do you want to play as the Monster?” I smirked, grabbed the controller, and proceeded to mercilessly eviscerate all four of the human-controlled hunters as the Cthulhu-like creature known only as Kraken. Sadly, during the time I’ve spent playing Evolve in the Big Alpha and open beta, and due to the recent controversy over the game’s downloadable content, nothing has come close to matching my excitement since that first match back at the Xbox Lounge.

Evolve pits a team of four hunters, each with their own unique skills and weapons, up against one player-controlled monster in “asymmetrical multiplayer” battles. This means that the different teams, the hunters versus the monster in this case, have vastly different abilities at their disposal.

Player Types

The hunters are divided into four classes (Assault, Support, Trapper, and Medic), and each has four unique skills to use, including a firearm. Monsters, which also have four distinctive powers, kill and eat AI wildlife in order to gain extra armor and eventually evolve, which endows the creature with more health and increases the damage of its abilities. Evolve was flawlessly described as “an intense game of Cat and Mouse where after enough cheese, the mouse can eat the cat.” This is Evolve at its core.

Environment

When the game launches in February it will have four multiplayer modes that span twelve maps. The maps add some diversity to the gameplay by throwing environmental hazards at the players in the form of both passive and aggressive AI wildlife, carnivorous plants that will swallow hunters whole, and more. Essentially, these threats were designed to add another element for the players to worry about on top of the hunter-monster battle. However, once you have played the maps and learned what and where these hazards are, as well as how to avoid them, that element of unpredictability is completely removed from the game. This just returns the players to the basic find the monster/kill the hunters gameplay, and the environmental threats become more of an annoyance than something that adds value to the game.

Game Modes

The four multiplayer gametypes are:

- Hunt – The basic ‘hunters versus the monster’ mode

- Rescue – The hunters have to revive and escort five AI human survivors to evacuation sites before the monster can kill five survivors

- Nest – The hunters have to destroy six monster eggs spread throughout the map within ten minutes, and the monster can hatch eggs to create monster minions to fight with

- Defend – A MOBA-like mode where a fully evolved monster and waves of monster minions attempt to destroy two generators before finally attacking a ship full of survivors.

It is important to note that despite the game modes having different objectives, they can all be ended immediately if all four hunters are killed by the monster, or if the monster is killed by the hunters. From a gameplay perspective that decision makes sense, but it detracts from the value of playing modes other than Hunt, due to the fact that every other game type is basically just another Hunt match with an added objective. There has been no confirmation from Turtle Rock that they are working on new gameplay modes for Evolve, which could really hurt the replay value and cause gamers to get bored of the game quicker than anticipated.

In addition to the four modes listed above is Evacuation, Evolve’s self-proclaimed “dynamic campaign.” However, aside from an introductory trailer that scratches together the surface of a story for the game, Evacuation simply strings together the four basic game modes over five multiplayer matches called “days,” culminating in a final round of Defend. The twist to Evacuation is that the winning side of each match gets some sort of advantage for the next round. For example, if the hunters win, in the very next match there may be some auto-turrets that attack the monster, and if the monster wins, there could be a large poisonous gas cloud that damages hunters when they are close to it. According to Turtle Rock, there are over 800,000 possible scenarios created by these advantages. However, the advantages merely result in another aspect to keep an eye out for in a match, not unlike the environmental hazards mentioned earlier. Even more disappointing here is that Turtle Rock blanketed one brief trailer over the four multiplayer modes already in the game, added some more hazards on top of the preexisting ones, and decided that was good enough to comprise Evacuation’s “dynamic campaign.” Calling it so is extremely misleading to the average customer, especially if they are on the fence deciding whether or not to pick up Evolve, notice the words “dynamic campaign” written somewhere on the back of the box, and buy the game thinking it has some sort of story mode. It does not.

Downloadable Content

Evolve will ship with twelve maps, twelve hunters (three characters per class), and three monsters. Turtle Rock recently announced that the fourth monster, Behemoth, will be available for download in the spring for free if you pre-order the game. Conversely, if you do not pre-order Evolve and use the downloadable Behemoth code before midnight on the game’s release date, the only way to acquire Behemoth will be through a $14.99 purchase.

Additionally, if you have any interest in acquiring new hunters down the road, the Hunting Season Pass offers four new hunters and three monster skins that will set you back $24.99, but would retail at around $30 if all of the items within were sold individually. Compare these prices to Destiny, for example, which sold its first “expansion,” The Dark Below, for $19.99. The price appeared to be pretty steep for just a handful of new story missions, a few Strikes, and one Raid, but it is quickly starting to look like a better deal compared to what’s happening with Evolve. Keep in mind, the Hunting Season Pass and Behemoth DLC only cover the first wave of downloadable characters. Any other hunters or monsters released down the road are going to cost you even more.

At this price point, Turtle Rock is effectively equating the value of one monster to a quarter of the total value of the game, and four hunters at approximately one-half. Now, I won’t pretend to understand all of the intricacies that go into creating new monsters and hunters. I am sure “it takes a lot of time, iteration and careful balancing”, and these prices are an attempt to reflect that. As a gamer who, despite what developers and publishers may think, is not made of money, it is disheartening knowing that before I even throw down the $60 to buy the game, there are all of these new hunters and monsters on the horizon. Together they’re expensive enough that I would be paying more than another full priced copy of the game if I want the chance to play all of the new hunters and monsters.

For an even better deal, Evolve is selling a Digital Deluxe version, combining the regular Evolve game with the Hunting Season Pass for just $80, a whopping $5 discount when compared to buying the game and Hunting Season Pass separately. Better yet, if you have a quality gaming PC, and are a baller, you can purchase the “PC Monster Race” edition of the game for $100, which gets you: the game, the Hunting Season Pass, Behemoth, the yet to be announced fifth monster, and two more hunters. Now if all of these different DLCs and season passes seem like a lot to keep track of for a game that has yet to be released, you are not alone. When the Creative Director of your game has to come out to publicly defend the DLC strategy, you might want to start rethinking how you got to this point. But hey, at least Turtle Rock is giving away any additional maps they crank out for free!
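For anyone who wants to check the math, here is a quick sketch of the arithmetic behind those comparisons. It uses only the prices quoted above ($60 for the base game, $14.99 for Behemoth, $24.99 for the Hunting Season Pass, and $80 for Digital Deluxe); the function names are made up for illustration.

```python
# Prices as quoted in the article, in US dollars.
BASE_GAME = 60.00
BEHEMOTH = 14.99
HUNTING_SEASON_PASS = 24.99
DIGITAL_DELUXE = 80.00


def share_of_base(price, base=BASE_GAME):
    """Return a DLC price as a fraction of the base game's price."""
    return price / base


def bundle_discount(bundle_price, *separate_prices):
    """Return how much cheaper the bundle is than buying its parts separately."""
    return sum(separate_prices) - bundle_price


if __name__ == "__main__":
    print(f"Behemoth: {share_of_base(BEHEMOTH):.0%} of the base game")
    print(f"Season Pass: {share_of_base(HUNTING_SEASON_PASS):.0%} of the base game")
    saving = bundle_discount(DIGITAL_DELUXE, BASE_GAME, HUNTING_SEASON_PASS)
    print(f"Digital Deluxe saves ${saving:.2f}")
```

With these numbers, one monster works out to roughly a quarter of the base price, the four-hunter pass to a bit over 40 percent, and the Digital Deluxe bundle to about $5 off.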

Online Play

Despite all of these flaws, the game really shines and is genuinely fun when there is a fully coordinated team of hunters playing together against an intelligent monster. It is a beautiful sight when every class is performing their role perfectly; the Trapper has the monster imprisoned within an impenetrable dome and is keeping it pinned to the ground with harpoons, Assault is blasting away at the monster and using their temporary invulnerability shield when they start to take damage, Support is cloaking nearby teammates and raining down orbital strikes, and the Medic is doing their best to keep everyone’s health up. The downside to this is that you’ll need a solid group of four friends who understand their roles and communicate with each other. At least through my experiences in the alpha and beta, the odds of playing random games with competent hunters were minimal. Hopefully this will change after the game is released, because going into a match as a hunter without any friends or a coordinated effort can get tiresome fast. Even playing as the monster against a team of hunters who have no idea what they are doing does not really yield any satisfaction when you effortlessly rip them apart.

Offline Play

Also worth mentioning is that the game will come with an offline mode where the player will be able to play the game with bots in lieu of real people. Personally, I feel that playing solely against AI opponents would take even more unpredictability out of the game, but I can also see how playing against the computer could alleviate the concerns of some casual gamers who either do not like or are having trouble competing online.

Conclusion

I want Evolve to do well, do not get me wrong. I would love nothing more than to see the game sell tons of copies, as I have faith in gamers to support new IPs with exciting ideas rather than those just rehashing the same formula in order to hit that next annual release. However, I just wish that the game would have shipped with more value to the customer to help rid the bad taste from our mouths due to the DLC strategy (which I would like to believe was pushed on Turtle Rock by their publisher, 2K Games). Evolve could easily be the recipient of a large sales boost solely from the fact that there are not any AAA multi-platform games being released in the near future other than Dying Light (depending on your definition of AAA), which comes out on January 27th, and Battlefield Hardline on March 17th. Perhaps there will be enough content and variety in the game to make it sustainable and have an active community for the foreseeable future. If so, hopefully we will get to see an Evolve 2 that has learned from the lessons of the past. Until then, happy huntin’.

Evolve stomps into stores on February 10th, on PC, PS4, and Xbox One.

G-Sync vs FreeSync: The Future of Monitors

January 16, 2015displayport,freesync,vblank,vesa,adaptive-sync,gsync vs freesync,sync,amd,monitor,v-sync,g-sync,refresh,display port,adaptivesync,graphics card,g-sync vs freesync,adaptive,nvidia,gpu,vsync,gsync,displayTech Analysis,Technology

For those of you interested in monitors, PC gaming, or watching movies/shows on your PC, there are a couple recent technologies you should be familiar with: Nvidia’s G-Sync and AMD’s FreeSync.

Basics of a display

Most modern monitors run at 30 Hz, 60 Hz, 120 Hz, or 144 Hz depending on the display. The most common frequency is 60 Hz (meaning the monitor refreshes the image on the display 60 times per second). This is due to the fact that old televisions were designed to run at 60 Hz since 60 Hz was the frequency of the alternating current for power in the US. Matching the refresh rate to the power helped prevent rolling bars on the screen (known as intermodulation).

What the eye can perceive

It is a common myth that 60 Hz was chosen because that’s the limit of what the human eye can detect. In truth, when monitors for PCs were created, they made them 60 Hz, just like televisions; mostly because there was no reason to change it. The human brain and eye can interpret up to approximately 1000 images per second, and most people can identify a framerate reliably up to approximately 150 FPS (frames per second). Movies tend to run at 24 FPS (the first movie to be filmed at 48 FPS was The Hobbit: An Unexpected Journey, which got mixed reactions from audiences, some of whom felt the high framerate made the movie appear too lifelike).

The important thing to note is that while humans can detect framerates much higher than what monitors can display, there are diminishing returns on increasing the framerate. For video to look smooth, it really only needs to run at a consistent 24+ FPS. However, the higher the framerate, the clearer the movements onscreen. This is why gamers prefer games to run at 60 FPS or higher, which provides a very noticeable difference when compared to games running at 30 FPS (which was a standard for the previous generation of console games).

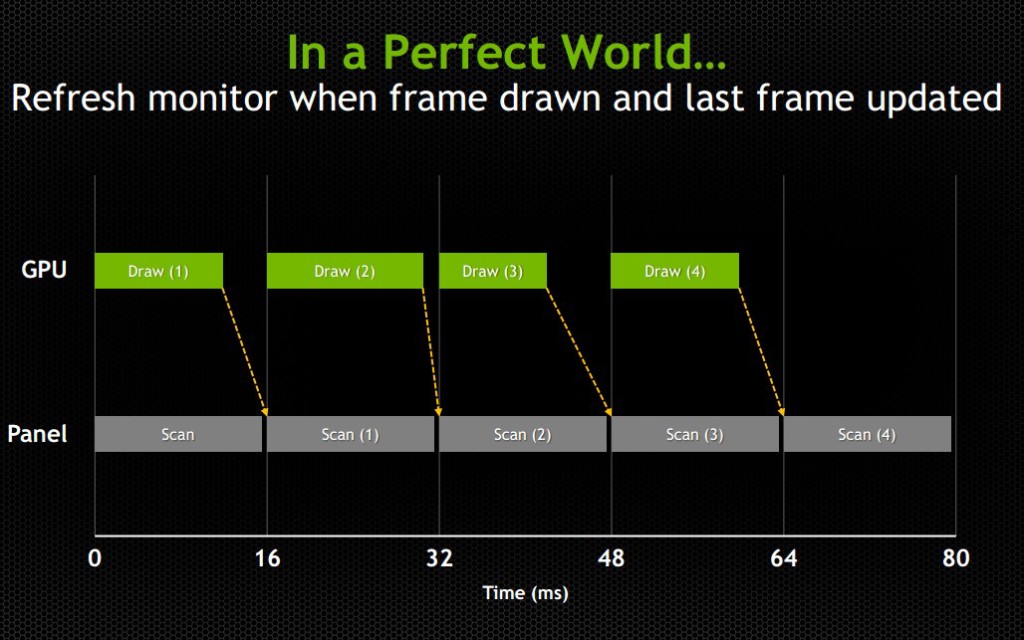

How graphics cards and monitors interact

An issue with most games is that while a display will refresh 60 times per second (60 Hz) or 120 or 144 or whatever the display can handle, the images being sent from the graphics card (GPU) to the display will not necessarily come at the same rate or frequency. Even if the GPU sends 60 new frames in 1 second, and the display refreshes 60 times in that second, it’s possible that the time variance between each frame rendered by the GPU will be wildly different.

Explaining frame stutter

This is important, because while the display will refresh the image every 1/60th of a second, the time delay between one frame and another being rendered by the GPU can and will vary.

Consider the following example: The running framerate of a game is 60 FPS, considered a good number. However, the time delay before one-third of the frames is 1/30th of a second, and before the other two-thirds it is 1/120th of a second (which still averages out to 1/60th of a second per frame). In the case of it being 1/30th of a second, the monitor will have refreshed twice in that amount of time, having shown the previous image twice prior to receiving the new one. In the case of it being 1/120th of a second, the second image will have been rendered by the GPU prior to the display refreshing, so that image will never even make it to the screen. This introduces an issue known as micro-stuttering and is clearly visible to the eye.

This means that even though the game is being rendered at 60 frames per second, the frame rendering time varies and ends up not looking smooth. Micro-stuttering can also be caused by systems that utilize multiple GPUs. When frames rendered by each GPU don’t match up in regards to timing, it causes delays as described above, which produces a micro-stuttering effect as well.
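To make the micro-stutter example concrete, here is a minimal sketch of a 60 Hz display that simply shows the newest finished frame at every refresh. Everything in it is a simplifying assumption of ours: time is counted in ticks of 1/120th of a second, and the uneven pattern is one long frame (1/30th of a second) followed by two short ones (1/120th of a second each), which averages out to exactly 60 FPS.

```python
from itertools import accumulate

TICKS_PER_SECOND = 120  # one tick = 1/120 s, so every time below is an exact integer
REFRESH_TICKS = 2       # a 60 Hz display refreshes every 2 ticks (1/60 s)


def simulate(frame_ticks):
    """Return (average_fps, shown, repeated, dropped) for per-frame render times in ticks."""
    done = list(accumulate(frame_ticks))  # tick at which each frame is finished
    total = done[-1]
    average_fps = len(frame_ticks) * TICKS_PER_SECOND / total

    shown = []       # frame index on screen at each refresh (None = nothing new yet)
    repeated = 0     # refreshes that had no new frame to show
    previous = None
    for tick in range(REFRESH_TICKS, total + 1, REFRESH_TICKS):
        ready = [i for i, d in enumerate(done) if d <= tick]
        current = ready[-1] if ready else None
        if current == previous:
            repeated += 1
        shown.append(current)
        previous = current

    displayed = {i for i in shown if i is not None}
    dropped = len(frame_ticks) - len(displayed)  # frames that never reached the screen
    return average_fps, shown, repeated, dropped


if __name__ == "__main__":
    print(simulate([2, 2, 2, 2, 2, 2]))  # evenly paced frames
    print(simulate([4, 1, 1, 4, 1, 1]))  # same average framerate, uneven pacing
```

Both runs report the same 60 FPS average, but in the uneven run two of the six refreshes have nothing new to show and two of the six frames never reach the screen. The framerate counter looks fine while the motion does not.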

Explaining frame lag

An even more common issue is that framerates rendered by a GPU can drop below 30 FPS when something is computationally complex to render, making whatever is being rendered look like a slideshow. Since not every frame is equally simple or complex to render, framerates will vary based on the frame. This means that even if a game is getting an average framerate of 30 FPS, it could be getting 40 FPS for half the time you’re playing and 20 FPS for the other half. This is similar to the frame time variance discussed in the paragraph above, but rather than appear as micro-stutter, it will make half of your playing time miserable, since people enjoy video games as opposed to slideshow games.
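As a rough illustration of why an average hides the bad stretches, the sketch below computes the average framerate of a session split between two rates, along with the time each frame stays on screen. The 30-second segment lengths are an arbitrary assumption; only the 40 FPS and 20 FPS figures come from the example above.

```python
def average_fps(segments):
    """segments: list of (fps, seconds). Returns total frames divided by total seconds."""
    frames = sum(fps * seconds for fps, seconds in segments)
    total_seconds = sum(seconds for _, seconds in segments)
    return frames / total_seconds


def frame_time_ms(fps):
    """Time each frame stays on screen, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    session = [(40, 30), (20, 30)]  # 40 FPS for 30 s, then 20 FPS for 30 s
    print(average_fps(session))     # overall average for the whole minute
    print(frame_time_ms(40), frame_time_ms(20))
```

The session averages 30 FPS, yet for half of it every frame lingers for 50 ms instead of 25 ms, and that is the half you will remember.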

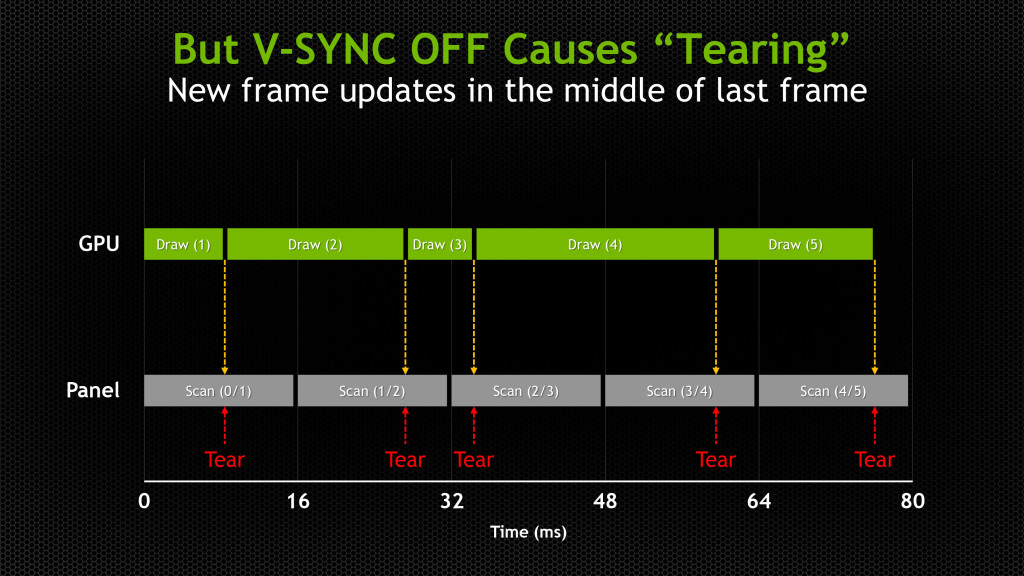

Explaining tearing

Probably the most pervasive issue though is screen tearing. Screen tearing occurs when the GPU is partway through rendering another frame, but the display goes ahead and refreshes anyways. This makes it so that part of the screen is showing the previous frame, and part of it is showing the next frame, usually split horizontally across the screen.
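One simplified way to picture where the tear ends up: assume the display scans out the image from top to bottom over one full refresh interval, and that the GPU hands over each new frame the instant it is finished. Whatever row the scan has reached at that instant becomes the tear line. The sketch below works under those assumptions (it ignores the blanking interval), and the function name and defaults are ours.

```python
from fractions import Fraction


def tear_rows(render_fps, refresh_hz=60, rows=1080, refreshes=3):
    """For each refresh, list the screen rows where a new frame arrives mid-scanout."""
    refresh = Fraction(1, refresh_hz)  # time to scan out one full image
    frame = Fraction(1, render_fps)    # time between finished frames
    tears = [[] for _ in range(refreshes)]
    k = 1
    while k * frame < refreshes * refresh:
        t = k * frame
        index = t // refresh           # which refresh this frame arrives in
        offset = t - index * refresh   # how far into that scanout it arrives
        if offset != 0:                # arriving exactly on a refresh boundary does not tear
            tears[index].append(int(offset / refresh * rows))
        k += 1
    return tears


if __name__ == "__main__":
    print(tear_rows(90))  # GPU rendering faster than a 60 Hz display
    print(tear_rows(60))  # GPU perfectly in step with the display
```

At 90 FPS on a 60 Hz display, every refresh in this model contains a tear and its position moves from refresh to refresh, which is why tearing tends to look like a line crawling across the screen. At a perfectly steady 60 FPS, the new frames land on the refresh boundaries and nothing tears.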

These issues have been recognized by the PC gaming industry for a while, so unsurprisingly some solutions have been attempted. The most well-known and possibly oldest solution is called V-Sync (Vertical Sync), which was designed mostly to deal with screen tearing.

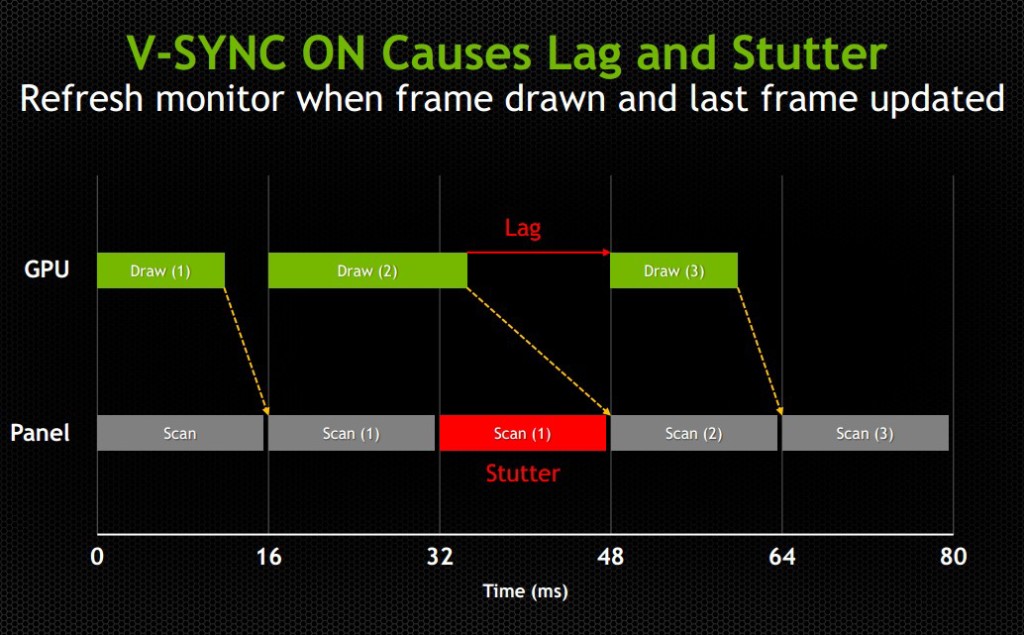

V-Sync

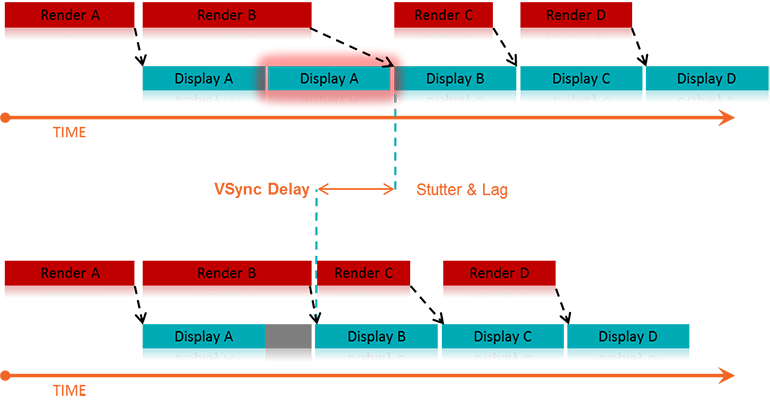

The premise of V-Sync is simple and intuitive – since screen tearing is caused by a GPU rendering frames out of time with the display, it could be solved by syncing the rendering/refresh time of the two. Displays run at 60 Hz, so when V-Sync is enabled, the GPU will only output 60 FPS, designed to match the display.

However, the problem here should be obvious, as it was discussed earlier in this article: just because you tell the GPU to render a new frame at a certain time, it doesn’t mean it will have it fresh out of the oven for you in time. The GPU now has to keep pace with the display. In some cases it will easily match (such as in cases where it would normally render over 60 FPS), whereas in others it can’t keep up (such as in cases where it would normally render under 60 FPS).

While V-Sync fixes the issue of screen tearing, it adds a new problem: any framerate under 60 FPS means that you’ll be dealing with stuttering and lag onscreen, since the GPU will be choking on trying to match render time with the display’s refresh time. Without V-Sync on, 40-50 FPS is a perfectly reasonable and playable framerate, even if you experience tearing. With V-Sync on, 40-50 FPS is a laggy unplayable mess.
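Here is a minimal sketch of why dipping just under 60 FPS hurts so much with V-Sync on. It assumes plain double-buffered V-Sync and a GPU that takes the same amount of time on every frame, so each frame has to occupy a whole number of refresh intervals. Triple buffering and other tricks behave differently, and the function name is ours.

```python
import math


def vsync_fps(render_fps, refresh_hz=60):
    """Effective framerate under double-buffered V-Sync with a constant render rate."""
    # Each frame occupies a whole number of refresh intervals: the smallest
    # count that gives the GPU enough time to finish rendering it.
    intervals_per_frame = max(1, math.ceil(refresh_hz / render_fps))
    return refresh_hz / intervals_per_frame


if __name__ == "__main__":
    for fps in (75, 60, 50, 40, 25):
        print(fps, "->", vsync_fps(fps))
```

Under these assumptions, a GPU that could manage 50 or 40 FPS on its own gets held to 30 FPS, and one that could manage 25 FPS gets held to 20 FPS.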

For some odd reason, while the idea to match the GPU render rate to the display’s refresh rate has been around since V-Sync was introduced years ago, no one thought to try the other way around until last year: matching the display’s refresh rate to the GPU’s render rate. Surely it seems like an obvious solution, but I suppose the idea that displays had to refresh at 60, 120, or 144 Hz was taken as a given.

The idea seems much simpler and more straightforward. Matching a GPU’s render rate to a display’s refresh rate requires the computational horsepower on the GPU to keep pace with a display that does not care about how complex a frame is to render, and just chugs on at 60+ Hz. Matching a display’s refresh rate to a GPU’s render rate only requires the display to respond to a signal sent from the GPU to refresh when a new frame is sent.

This means that any framerate above 30 FPS should be extremely playable, resolving any tearing issues found when playing without V-Sync, and resolving any stuttering or lag issues caused by having lower than 60 FPS when playing with V-Sync. Suddenly the range of 30-60 FPS becomes much more playable, and in general gameplay and on-screen rendering become much smoother.
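To illustrate the difference, the sketch below compares when frames reach the screen on a fixed 60 Hz display (each finished frame waits for the next refresh tick) versus a display that refreshes whenever a frame arrives. It is a toy model under assumptions of ours: the GPU keeps rendering at a steady 45 FPS without ever being stalled by the display, and the variable-refresh panel tops out at 144 Hz.

```python
from fractions import Fraction
from math import ceil


def present_times_fixed(frame_done, refresh_hz=60):
    """Each finished frame appears at the next fixed refresh tick."""
    tick = Fraction(1, refresh_hz)
    return [ceil(t / tick) * tick for t in frame_done]


def present_times_adaptive(frame_done, max_hz=144):
    """The display refreshes when a frame arrives, but no faster than its maximum rate."""
    min_gap = Fraction(1, max_hz)
    shown, last = [], None
    for t in frame_done:
        when = t if last is None else max(t, last + min_gap)
        shown.append(when)
        last = when
    return shown


def gaps_ms(times):
    """Milliseconds between consecutive frames reaching the screen."""
    return [float((b - a) * 1000) for a, b in zip(times, times[1:])]


if __name__ == "__main__":
    done = [Fraction(k, 45) for k in range(1, 6)]  # a steady 45 FPS from the GPU
    print(gaps_ms(present_times_fixed(done)))      # uneven gaps between frames
    print(gaps_ms(present_times_adaptive(done)))   # even gaps between frames
```

On the fixed display, the gaps between frames alternate between one and two refresh intervals even though the GPU is perfectly steady. On the variable-refresh display, every frame is on screen for the same 1/45th of a second.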

The modern solution

This solution comes in two forms: G-Sync – a proprietary design created by Nvidia; and FreeSync – an open standard designed by AMD.

G-Sync

G-Sync was introduced towards the end of 2013, as an add-on module for monitors (first available in early 2014). The G-Sync module is a board made using a proprietary design, which replaces the scaler in the display. However, the G-Sync module doesn’t actually implement a hardware scaler, leaving that to the GPU instead. Without getting into too much detail about how the hardware physically works, suffice it to say the G-Sync board is placed along the chain in a spot where it can determine when/how often the monitor draws the next frame. We’ll get a bit more in depth about it later on in this article.

The problem with this solution is that this either requires the display manufacturer to build their monitors with the G-Sync module embedded, or requires the end user to purchase a DIY kit and install it themselves in a compatible display. In both cases, extra cost is added due to the necessary purchase of proprietary hardware. While it is effective, and certainly helps Nvidia’s bottom line, it dramatically increases the cost of the monitor. Another note of importance: G-Sync will only function on PCs equipped with Nvidia GPUs newer/higher end than the GTX 650 Ti. This means that anyone running AMD or Intel (integrated) graphics cards is out of luck.

FreeSync

FreeSync, on the other hand, was introduced by AMD at the beginning of 2014, and while Adaptive-Sync-enabled monitors have been announced (Adaptive-Sync will be explained momentarily), they have yet to hit the market as of the time of this writing (January 2015). FreeSync is designed as an open standard, and in April of 2014, VESA (the Video Electronics Standards Association) adopted Adaptive-Sync as part of its specification for DisplayPort 1.2a.

Adaptive-Sync is a required component of AMD’s FreeSync, which allows the monitor to vary its refresh rate based on input from the GPU. DisplayPort is a universal and open standard, supported by every modern graphics card and most modern monitors. However, while Adaptive-Sync is part of the official VESA specification for DisplayPort 1.2a and 1.3, it is optional, so not all new displays utilizing DisplayPort 1.3 will necessarily support it. We very much hope they do, as a universal standard would be great, but including Adaptive-Sync introduces some extra cost in building the display, mostly in the way of validating and testing a display’s response.

To clarify the differences, Adaptive-Sync refers to the DisplayPort feature that allows for variable monitor refresh rates, and FreeSync refers to AMD’s implementation of the technology which leverages Adaptive-Sync in order to display frames as they are rendered by the GPU.

AMD touts the following as the primary reasons why FreeSync is better than G-Sync: “no licensing fees for adoption, no expensive or proprietary hardware modules, and no communication overhead.”

The first two are obvious, as they refer to the primary downsides of G-Sync – proprietary design requiring licensing fees, and proprietary hardware modules that require purchase and installation. The third reason is a little more complex.

Differences in communication protocols

To understand that third reason, we need to discuss how Nvidia’s G-Sync module interacts with the display. We’ll provide a relatively high-level overview of how that system works and compare it to FreeSync’s implementation.

The G-Sync module modifies the VBLANK interval. The VBLANK (vertical blanking interval) is the time between the display drawing the end of the final line of a frame and the start of the first line of a new frame. During this period the display continues showing the previous frame until the interval ends and it begins drawing the new one. Normally, the scaler or some other component in that spot along the chain determines the VBLANK interval, but the G-Sync module takes over that spot. While LCD panels don’t actually need a VBLANK (CRTs did), they still have one in order to be compatible with current operating systems which are designed to expect a refresh interval.

The G-Sync module modifies the timing of the VBLANK in order to hold the current image until the new one has been rendered by the GPU. The downside of this is that the system needs to poll repeatedly in order to check if the display is in the middle of a scan. If it is, the system will wait until the display has finished its scan and will then have it draw the new frame. This means that there is a measurable (if small) negative impact on performance. Those of you looking for more technical information on how G-Sync works can find it here.

FreeSync, as AMD pointed out, does not utilize any polling system. The DisplayPort Adaptive-Sync protocols allow the system to send the signal to refresh at any time, meaning there is no need to check if the display is mid-scan. The nice thing about this is that there’s no performance overhead and the process is simpler and more streamlined.
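To make the contrast concrete, here is a toy model of the two approaches as described above. It is only a sketch of this article's description, not of either vendor's actual protocol: the function names, the fixed poll interval, and the microsecond figures are all assumptions made for illustration.

```python
def polled_start_us(frame_ready_us, scan_done_us, poll_interval_us):
    """Polling model (hypothetical, not Nvidia's real protocol).

    Once a frame is ready, the sender checks the display every
    poll_interval_us and starts the refresh on the first check that
    finds the display idle.
    """
    if frame_ready_us >= scan_done_us:
        return frame_ready_us  # display is already idle on the first check
    wait_us = scan_done_us - frame_ready_us
    polls = -(-wait_us // poll_interval_us)  # ceiling division on integers
    return frame_ready_us + polls * poll_interval_us


def pushed_start_us(frame_ready_us, scan_done_us):
    """Push model (hypothetical, not AMD's real protocol).

    The refresh signal is sent as soon as the frame is ready and takes
    effect the moment the display is free, with no polling round trips.
    """
    return max(frame_ready_us, scan_done_us)


# Assumed numbers: frame ready at 10,000 us, display scanning until 13,000 us.
polled = polled_start_us(10_000, 13_000, poll_interval_us=2_000)
pushed = pushed_start_us(10_000, 13_000)
print(polled - pushed)  # 1000: extra delay caused by polling in this model
```

In this model the polling penalty is never larger than one poll interval, which fits the "measurable (if small)" characterization above.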

One thing to keep in mind about FreeSync is that, like Nvidia’s system, it is limited to newer GPUs. In AMD’s case, only GPUs from the Radeon HD 7000 line and newer will have FreeSync support.

(Author's Edit: To clarify, Radeon HD 7000 GPUs and up as well as Kaveri, Kabini, Temash, Beema, and Mullins APUs will have FreeSync support for video playback and power-saving scenarios [such as lower framerates for static images].

However, only the Radeon R7 260, R7 260X, R9 285, R9 290, R9 290X, and R9 295X2 will support FreeSync's dynamic refresh rates during gaming.)

Based on these things, you might come to the immediate conclusion that FreeSync is the better choice, and I would agree, though there are pros and cons of both to consider (I’ll leave out the obvious benefits that both systems share, such as smoother gameplay).

Pros and Cons

Nvidia G-Sync

Pros:

- Already available on a variety of monitors

- Great implementation on modern Nvidia GPUs

Cons:

- Requires proprietary hardware and licensing fees, and is therefore more expensive (the DIY kit costs $200, though it’s probably a lower cost to display OEMs)

- Proprietary design forces users to only use systems equipped with Nvidia GPUs

- Two-way handshake for polling has a negative impact on performance

AMD FreeSync

Pros:

- Uses Adaptive-Sync which is already part of the VESA standard for DisplayPort 1.2a and 1.3

- Open standard means no extra licensing fees, so the only extra costs for implementation are compatibility/performance testing (AMD stated that FreeSync enabled monitors would cost $100 less than their G-Sync counterparts)

- Because it can send a refresh signal at any time, there’s no need for a polling system and therefore no communication overhead

Cons:

- While G-Sync monitors have been available for purchase since early 2014, there won’t be any FreeSync enabled monitors until March 2015

- Despite it being an open standard, neither Intel nor Nvidia has announced support for the system (or even for Adaptive-Sync, for that matter), meaning for now it’s an AMD-only affair

Final thoughts

My suspicion is that FreeSync will eventually win out, despite the fact that there’s already some market share for G-Sync. The reason for this is that Nvidia does not account for 100% of the GPU market, and G-Sync is limited to Nvidia systems. Despite its reluctance, Nvidia will likely eventually support Adaptive-Sync and release drivers that make use of it. This is especially true because displays with Adaptive-Sync will be significantly more affordable than G-Sync enabled monitors, and will perform the same or better.

Eventually Nvidia will lose this battle, and when it finally gives in, that will be when consumers benefit the most. Until then, if you have an Nvidia GPU and want smooth gameplay now, there are plenty of G-Sync enabled monitors on the market. If you have an AMD GPU, you’ll see your options start to open up within the next couple of months. In any case, both systems provide a tangible improvement for gaming and really any task that utilizes real-time rendering, so either way, in the battle of G-Sync vs FreeSync, you win.

Halo 5: Guardians Beta - A Look At What's To Come

January 12, 2015

343 industries, halo 5: guardians, xbox, multiplayer beta, not operator, bungie, strongholds, halo 5, 343, halo beta, microsoft, guardians, notoperator, xbox one, slayer, breakout, halo 5 beta

Gaming, Gaming Analysis

Developer 343 Industries is coming off, let’s face it, an abysmal launch of Halo: The Master Chief Collection for the Xbox One, which still experiences matchmaking issues to this day. 343 is aiming to use the Halo 5: Guardians Multiplayer Beta as just that: a beta, in an effort to prepare for retail launch and avoid repeating the mistakes made with Halo: MCC. With plenty of time to go before Halo 5 is released to the masses later this year and consumes the lives of Xbox gamers (no official word on a release date yet, but it will most likely be announced at this year’s E3), the data and player feedback gathered from the beta actually have a chance to be utilized by 343 and make their way into the final product. This is in direct contrast to most other console betas, which serve more of a marketing purpose as a glorified demo than as a way to actually improve the game before launch (anyone still remember Titanfall?).

Also, as a point of note: while the beta runs at 720p and 60 FPS, 343 has confirmed that this is not the final resolution Halo 5 will run at.

Abilities

The Halo 5: Guardians Multiplayer Beta, only accessible to those who have a copy of the Master Chief Collection, runs from December 29th to January 18th and concentrates exclusively on “arena” style multiplayer matches. For the uninitiated, this translates to two teams of four Spartans battling it out in a variety of small-scale maps that cater to fast-paced combat, which is enhanced by perhaps the biggest change in the series – Spartan abilities. Not to be confused with the controversial loadouts and armor abilities from Halo Reach and Halo 4, all Spartan abilities are innately available to all players to use as they please in order to add an additional strategic dimension to the Halo gunfighting experience. In Halo 5, your Spartan now has the following abilities:

- Smart Scope – An enhanced aim feature applicable to every gun in the game. For example, Smart Scoping with the Assault Rifle zooms in and two snazzy looking semi-circles pop up on your HUD, whereas doing it with the SMG allows you to aim down the weapon’s sights. Note that doing this will improve the spread of bullets fired from your guns, as tested in the video below. Also, using Smart Scope while in the air will cause your Spartan to hover in place for a short period of time.

- Sprint – Hitting the sprint button allows your Spartan to run for an unlimited duration, though your shields will not recharge until you stop sprinting.

- Slide – Crouching while sprinting will send you into a low slide, which I’ve only ever seen one other Spartan do in all of my time playing the beta so far. Maybe players will get more use out of it once we get a shotgun to slide around with?

- Thruster Pack – A quick press of the Thruster Pack button gives your Spartan a short-range dash that can be used going forward, backward, left, right, and even mid-air, but has about a four-second delay before it can be used again. Try boosting left or right during one-on-one firefights to throw off your opponent’s aim, or use it to dash to safety without having to sprint so your shields can recharge sooner.

- Clamber – Pretty simple concept here, but new nonetheless to the Halo series. Pressing the Clamber button allows for your Spartan to climb up ledges that are within reach. Clambering, in conjunction with sprinting and the Thruster Pack, can have you zipping around the map in no time.

- Spartan Charge – After sprinting for a short period of time, your target reticle will change from the usual circle in the middle of your screen to two brackets. Once this happens, a press of the melee button causes your Spartan to perform a flying knee into anyone foolish enough to get in the way. One hit on an enemy from behind with a Spartan Charge will result in their immediate death, though a charge from the front or side will only remove your opponent’s shields, assuming they have taken no previous damage.

- Ground Pound – Seemingly the most gimmicky ability in the game, the Ground Pound is actually a pretty useful tactic once you’ve mastered it. A direct hit on an unsuspecting foe will nab you a kill, whereas anything else will only take down their shields and leave you exposed (again, assuming no previous damage taken). Try baiting enemies into your location when you have the high ground, jump up, hold down the Ground Pound button, and teach that other Spartan what it feels like to be a Goomba under the boot of a certain mustachioed Italian plumber.

Also new in Halo 5 is Spartan chatter, in-game dialogue from your Spartan teammates, which helps to facilitate communication that might not otherwise be present if one of your teammates isn’t mic’d up. Hearing warnings of enemy snipers, for example, is a nice touch that definitely saved me in the beta. The possibility of being able to customize voices would be a fun feature in the final game, and was a welcome touch in Halo Reach’s version of Firefight. However, this may just be me being blinded by my desire to have Sergeant Johnson in the game.

Weapons

On the weapons front, the fan favorite Battle Rifle returns, in addition to the other popular Halo mainstays like the DMR, Pistol, and Sniper Rifle. A new gun we got to mess around with in the beta, appropriately called the “Hydra”, is a multi-shot rocket launcher that locks onto enemy Spartans and takes two direct hits to get a kill. Where things start to get interesting is with the addition of the new “legendary” weapons. On the multiplayer map Truth, affectionately dubbed “Midship 2.0” by 343, you’ll find an Energy Sword called the “Prophet’s Bane”. Aside from having a badass name and yellowish hue, the Prophet’s Bane actually increases the movement speed of its Spartan host, allowing the wielder to hunt down enemies with swift precision. My prediction is that these weapons are going to be the next evolution of Halo power-ups like Overshield and Active Camouflage. Personally, one of the most exciting aspects of these legendary weapons for now is speculating about what others we’ll have in our arsenal in the final game. I’ve had a couple of ideas for guns that could show up: maybe a shotgun called “The Chief” that gives the player an overshield? Or how about “Wetwork”, an SMG that grants active camouflage? Only time will tell, but for now, post in the comments below or tweet at us @NotOperating with your own legendary weapon ideas!

Game Modes

Slayer, Halo’s equivalent of Team Deathmatch, makes its return in Halo 5, in addition to two brand new modes: Breakout and Strongholds.

Breakout, set in the Speedball-like maps Trench and Crossfire, is a round-based game where each player has no shields and no motion tracker. Each player also starts with an SMG, a pistol, one grenade, and most importantly, one life. The team with the last Spartan standing at the end of the round wins, and the first team to win five rounds wins the match. Having no shields forces a change in strategy from typical Slayer encounters, and oftentimes results in you getting one-shotted by a grenade from across the map or picked off by one of the Battle Rifles up for grabs for each team. However, if you manage to survive the initial ‘nade salvo, or make it to a safe spot before the BRs are up on their perches, Breakout provides some fun, yet stressful gameplay, especially when you are the lone Spartan against a few survivors on the enemy team.
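For anyone who prefers to see the match structure spelled out, here is a minimal sketch of the first-to-five format. The function name and the "red"/"blue" team labels are my own placeholders, not anything taken from the game.

```python
def breakout_match(round_winners, rounds_to_win=5):
    """Return (winning_team, rounds_played) for a Breakout match.

    round_winners lists the team that had the last Spartan standing in
    each round, in order. Returns (None, rounds_played) if nobody has
    reached rounds_to_win yet. Team labels are hypothetical.
    """
    wins = {}
    for round_number, team in enumerate(round_winners, start=1):
        wins[team] = wins.get(team, 0) + 1
        if wins[team] == rounds_to_win:
            return team, round_number
    return None, len(round_winners)


# Red takes rounds 1, 3, 4, 6, and 7, so the match ends after seven rounds.
print(breakout_match(["red", "blue", "red", "red", "blue", "red", "red"]))
# ('red', 7)
```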

Strongholds, a variant on the Territories mode of yore (similar to Domination in Call of Duty), has Spartans fighting over three control points. Concurrently holding two of these capture sites will allow your team to start racking up points every second. Having only one stronghold means your team won’t be getting any points at all. First team to 100 points wins the game.
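The scoring rule is easy to sketch in code. The version below assumes one point per second while a team holds at least two strongholds, and assumes that holding all three scores at the same rate; the beta doesn't spell out either detail, and the function name and team labels are placeholders of mine.

```python
def strongholds_winner(held_per_second, target=100, points_per_second=1):
    """Return (winner, red_score, blue_score) for a Strongholds match.

    held_per_second is a sequence of (red_held, blue_held) pairs, one
    per second of play. A team only scores while it holds at least two
    of the three strongholds. The scoring rate is an assumption.
    """
    red = blue = 0
    for red_held, blue_held in held_per_second:
        if red_held + blue_held > 3:
            raise ValueError("there are only three strongholds")
        if red_held >= 2:
            red += points_per_second
        if blue_held >= 2:
            blue += points_per_second
        if red >= target:
            return "red", red, blue
        if blue >= target:
            return "blue", red, blue
    return None, red, blue


# Red holds two points for 40 seconds, then blue takes all three.
timeline = [(2, 1)] * 40 + [(0, 3)] * 120
print(strongholds_winner(timeline))  # ('blue', 40, 100)
```

Since there are only three strongholds, both teams can never score in the same second, which is why the sketch doesn't need a tiebreaker.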

As for the new ranking system in these modes, completing ten matches will place you in one of seven tiers, similar to StarCraft II’s ladder system (which isn’t surprising, considering Josh Menke helped design both systems). The tiers, in ascending order, are: Iron, Bronze, Silver, Gold, Onyx, Semi-Pro, and Pro. No need to be disappointed if you feel your placement isn’t representative of your actual skill level: winning matches after those initial ten will slowly but surely move you up to the next rank. Conversely, losing will drop you down. While I can’t complain about being able to brag to my friends that I am a Pro in Strongholds or a Semi-Pro in Slayer, a little more transparency from 343 Industries as to what factors determine the initial ranking would be a welcome addition.
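As a rough illustration of the ladder, the tiers can be treated as an ordered list that you move along one step at a time. How many wins or losses it takes to trigger a move isn't something 343 has shared, so the sketch below only models the ordering; the function name is hypothetical.

```python
# Tiers in ascending order, as listed in the beta.
TIERS = ["Iron", "Bronze", "Silver", "Gold", "Onyx", "Semi-Pro", "Pro"]


def shift_tier(tier, steps):
    """Move up (positive steps) or down (negative steps) the ladder.

    The result is clamped so you can't drop below Iron or rise above
    Pro. What actually triggers a promotion or demotion is unknown.
    """
    index = TIERS.index(tier) + steps
    index = max(0, min(index, len(TIERS) - 1))
    return TIERS[index]


print(shift_tier("Gold", 1))   # Onyx
print(shift_tier("Iron", -1))  # Iron
```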